C# Concurrency

Asynchronous and multithreaded programming

©2025 by Manning Publications Co. All rights reserved.

dedication

To my wonderful wife, Analia

contents

Part 1. Asynchronous programming and multithreading basics

1 Asynchronous programming and multithreading

1.2 Introducing multicore CPUs

1.4 Using multithreading and asynchronous programming together

1.5 Software efficiency and cloud computing

2 The compiler rewrites your code

2.1 Lambda functions

2.2 Yield return

3 The async and await keywords

3.1 Asynchronous code complexity

3.2 Introducing Task and Task<T>

3.3 How does async/await work?

3.5 ValueTask and ValueTask<T>

3.6 What about multithreading?

4.1 Different ways to run in another thread

4.2 Accessing the same variables from multiple threads

4.3 Special considerations for native UI apps

4.4 Waiting for another thread

4.5 Other synchronization methods

4.6 Thread settings

5 async/await and multithreading

5.1 Asynchronous programming and multithreading

5.2 Where does code run after await?

5.4 UI threads

6.1 Asynchronous benefits on servers

6.2 Asynchronous benefits on native client applications

6.3 The downside of async/await

Asynchronous programming is contagious

Asynchronous programming has more edge cases

Multithreading has even more edge cases

7 Classic multithreading pitfalls and how to avoid them

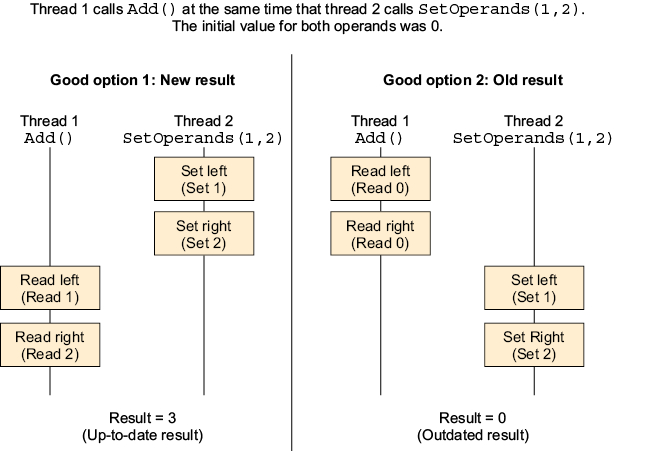

7.1 Partial updates

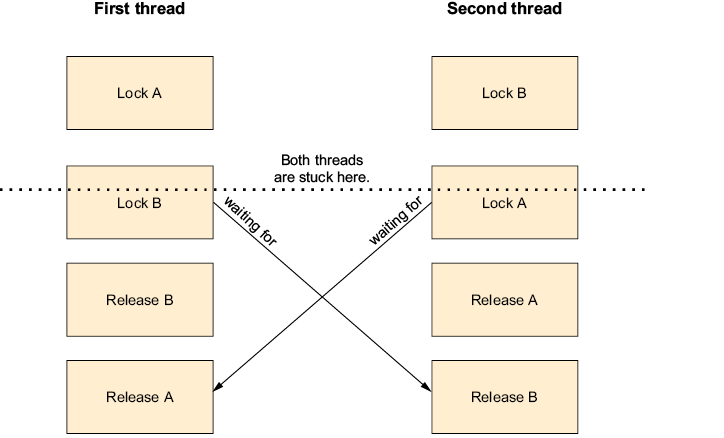

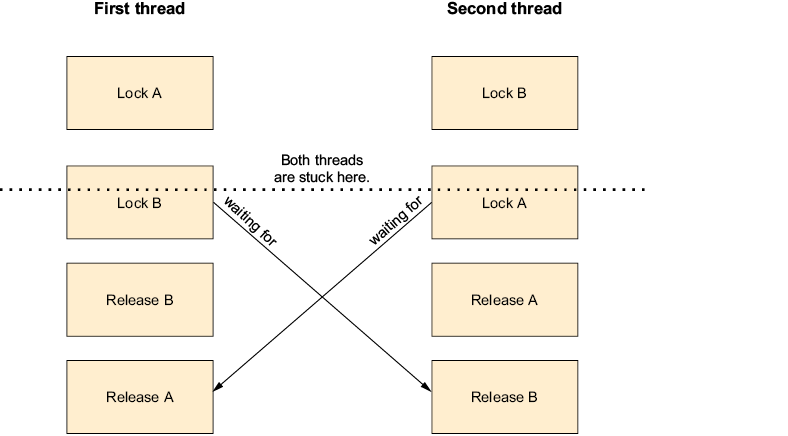

7.3 Deadlocks

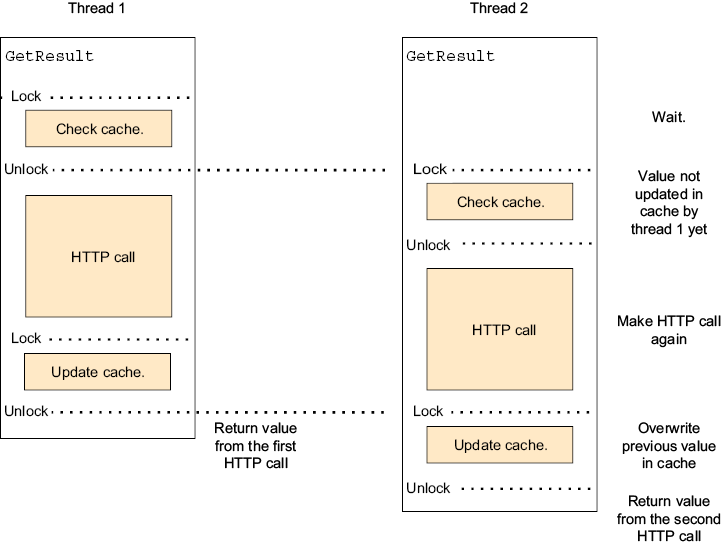

7.4 Race conditions

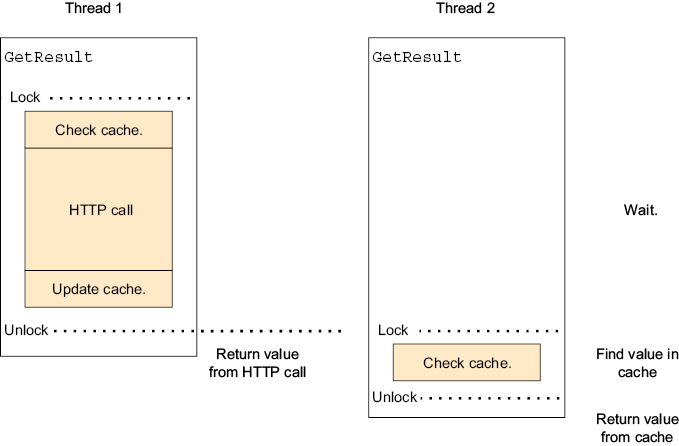

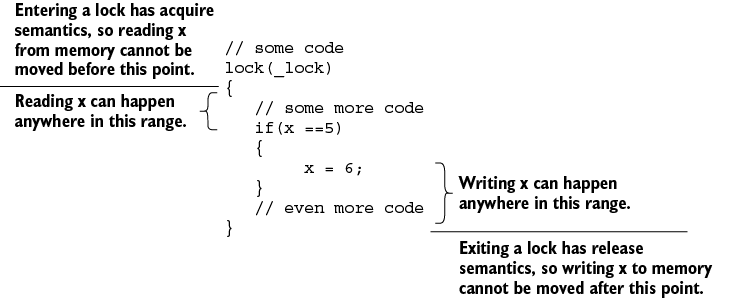

7.5 Synchronization

7.6 Starvation

Part 2. Advanced uses of async/await and multithreading

8 Processing a sequence of items in the background

8.1 Processing items in parallel

Processing items in parallel with the Thread class

Processing items in parallel with the thread pool

Asynchronously processing items in parallel

8.2 Processing items sequentially in the background

Processing items sequentially in the background with the Thread class

The work queue pattern and BlockingCollection

Processing important items with persistent queues

9.1 Introducing CancellationToken

9.2 Canceling using an exception

9.3 Getting a callback when the caller cancels our operation

9.5 Combining cancellation methods

9.6 Special cancellation tokens

10.1 Introducing TaskCompletionSource

10.2 Choosing where continuations run

10.3 Example: Waiting for initialization

10.4 Example: Adapting old APIs

10.5 Old-style asynchronous operations (BeginXXX, EndXXX)

10.6 Example: Asynchronous data structures

11 Controlling on which thread your asynchronous code runs

11.3 Breaking away—ConfigureAwait(false)

11.4 More ConfigureAwait options

11.5 Letting other code run: Task.Yield

11.6 Task schedulers

12.1 Exceptions and asynchronous code

12.2 await and AggregateException

12.3 The case of the lost exception

12.4 Exceptions and async void methods

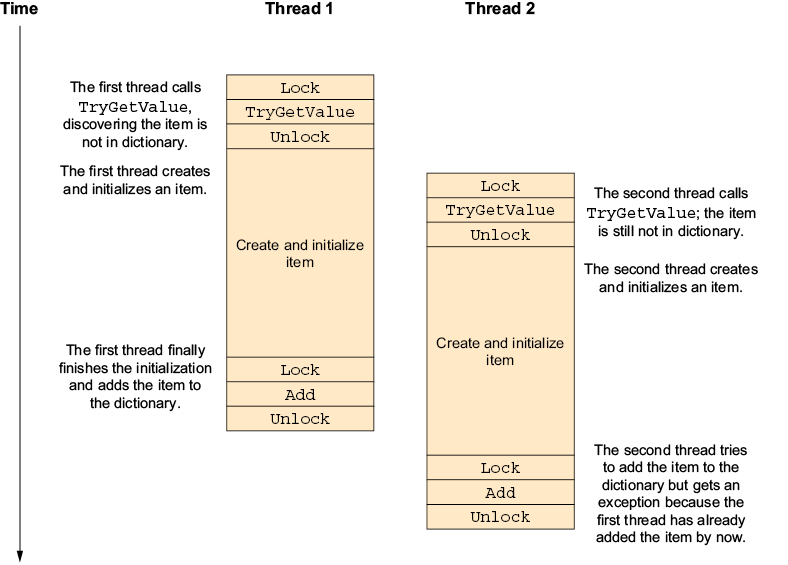

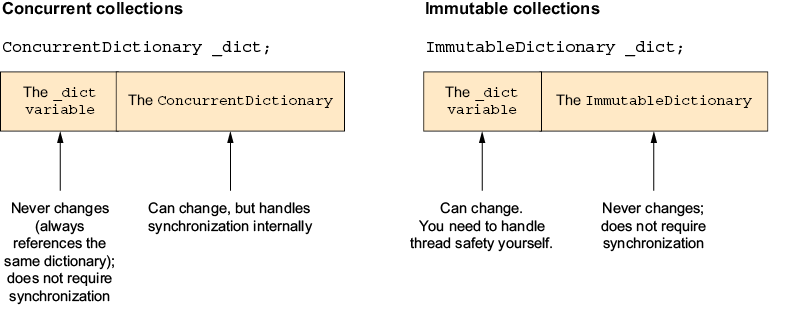

13.1 The problems with using regular collections

13.2 The concurrent collections

ConcurrentDictionary<TKey,TValue>

Async alternatives for BlockingCollection

ConcurrentQueue<T> and ConcurrentStack<T>

When to use the concurrent collections

When not to use the concurrent collections

13.3 The immutable collections

How immutable collections work

How to use the immutable collections

ImmutableDictionary<TKey,TValue>

ImmutableHashSet<T> and ImmutableSortedSet<T>

ImmutableQueue<T> and ImmutableStack<T>

When to use the immutable collections

When to use the frozen collections

14 Generating collections asynchronously/await foreach and IAsyncEnumerable

14.1 Iterating over an asynchronous collection

14.2 Generating an asynchronous collection

14.3 Canceling an asynchronous collection

14.4 Other options

14.5 IAsyncEnumerable<T> and LINQ

14.6 Example: Iterating over asynchronously retrieved data

14.7 Example: BlockingCollection<T>-like asynchronous queue

front matter

preface

I’ve been a software developer for over 30 years now and have been developing high-performance servers using multithreading and asynchronous programming since the late 1990s. I’ve been using C# since 2003. For the last decade and a bit, I’ve worked as a consultant, coming into a project for a short period of time and helping solve a specific problem. Over that decade, I’ve had the privilege of visiting many companies, and I’ve gotten to see and help with a lot of projects.

While every project is obviously completely different, with each company inventing its own innovative, disruptive, and one-of-a-kind technology, after you encounter enough projects, you start to see some similarities. And one thing I’ve seen time and time again are problems arising from incorrect usage of multithreading and asynchronous programming.

Multithreading is a straightforward concept: it involves running multiple tasks simultaneously. It is notoriously difficult to get it right, but despite this difficulty, it has been widely used for a long time. Developers like you, who take the time to study multithreading through books, are able to use it effectively.

Asynchronous programming has existed since the invention of the microprocessor and has long been used in high-performance servers. However, it gained wider popularity among average developers when the async/await feature was introduced in C# in 2012. (It was introduced in JavaScript earlier, but in a limited way.) Based on my observations of various projects and my experience conducting job interviews, I’ve found that very few people understand how async/await works.

The problems arising from a lack of knowledge in multithreading and asynchronous programming are quite apparent. In just the month or so that I discussed publishing this book with Manning, I taught multithreading and async/await at three different companies.

And this is how this book was born. What followed was a little more than two years of very deep diving into multithreading and asynchronous programming in C#. During this time, I’ve learned a lot. There is truly no better way to learn something than teaching it, and I hope this book will be at least as beneficial to you as writing it was to me.

acknowledgments

I truly believe this is a very good book, but I didn’t write it alone. Writing a book is a team effort, and it takes an enormous amount of work by many people. Without all those people, this book wouldn’t be as good and, most likely, it wouldn’t exist at all.

First, I want to thank my development editor at Manning, Doug Rudder, who had the patience to teach this first-time author how to write a technical book. Associate publisher Mike Stephens, who agreed to publish my idea of a book, helped with support and feedback. Using a food analogy in the first chapter was his idea. And technical editor Paul Grebenc was the first line of defense against technical mistakes. Paul is a Principal Software Developer at OpenText. He has over 25 years of professional experience in software development, working primarily with C# and Java. His primary interests are systems involving multithreading, asynchronous programming, and networking.

Next, I also want to thank all the reviewers who reviewed drafts of this book and everyone who commented while the book was in MEAP: your comments have been invaluable to improving the book. To all the reviewers—Aldo Biondo, Alexandre Santos Costa, Allan Tabilog, Amrah Umudlu, Andriy Stosyk, Barry Wallis, Chriss Barnard, David Paccoud, Dustin Metzgar, Geert Van Laethem, Jason Down, Jason Hales, Jean-Paul Malherbe, Jeff Shergalis, Jeremy Caney, Jim Welch, Jiří Činčura, Joe Cuevas, Jonathan Blair, Jort Rodenburg, Jose Antonio Martinez, Julien Pohie, Krishna Chaitanya Anipindi, Marek Petak, Mark Elston, Markus Wolff, Mikkel Arentoft, Milorad Imbra, Oliver Korten, Onofrei George, Sachin Handiekar, Simon Seyag, Stefan Turalski, Sumit Singh, and Vincent Delcoigne—your suggestions helped make this book better.

I also want to give my personal thanks to everyone who bought the book while in early access. Seeing that people are interested enough to spend their hard-earned money on a book I wrote is a wonderful feeling, and it was an important part of the motivation to complete the book.

And last, but most important, I want to thank my family, and especially my wife, who put up with all my nonsense in general and, in particular, with me spending a lot of our free time in my office writing.

about this book

This book is designed to help C# developers write safe and efficient multithreaded and asynchronous application code. It focuses on practical techniques and features you are likely to encounter in normal day-to-day software development.

It delves into all the details you need to know to write and debug multithreaded and asynchronous code. It leaves out the exotic, fun techniques that are only applicable if you need to build something like your own database server, but that are too complicated for normal application code and will probably get you into trouble if you try to use them in normal code, because normal multithreading is difficult enough as it is.

Who should read this book

This book is for any C# developer who wants to improve their knowledge of multithreading and asynchronous programming. The information in this book is applicable to any version of .NET, .NET Core, and .NET Framework released since 2012 and to both Windows and Linux (obviously only for .NET Core and .NET 5 and later, since earlier versions do not support Linux).

The book focuses more on backend development but also covers what you need to know to write UI applications.

How this book is organized: A road map

This book has two parts that include 14 chapters.

Part 1 covers the basics of multithreading and async/await in C#:

-

Chapter 1 introduces the concepts and terminology of multithreading and asynchronous programming.

-

Chapter 2 covers the techniques that the .NET compiler uses to implement advanced functionality.

-

Chapter 3 is a deep dive into how

async/awaitworks. -

Chapter 4 explains multithreading.

-

Chapter 5 ties chapters 3 and 4 together and shows how

async/awaitinteracts with multithreading. -

Chapter 6 talks about when you should use

async/await—just because you can use it doesn’t mean you should use it everywhere. -

Chapter 7 closes the first part with information about the common multithreading pitfalls, and more importantly, what you have to do to avoid them.

Part 2 is about how to use the information you learned about in part 1:

-

Chapter 8 is about processing data in the background.

-

Chapter 9 is about stopping background processing.

-

Chapter 10 teaches how to build advanced asynchronous components that do more than just combine built-in asynchronous operations.

-

Chapter 11 discusses advanced use cases of

async/awaitand threading. -

Chapter 12 helps you debug a problem with exceptions in asynchronous code.

-

Chapter 13 goes over thread-safe collections.

-

Chapter 14 shows how you can build things that work like asynchronous collections yourself.

About the code

This book contains many examples of source code both in numbered listings and in line with normal text. In both cases, source code is formatted in a fixed-width font like this to separate it from ordinary text. Sometimes code is also in bold to highlight code that has changed from previous steps in the chapter, such as when a new feature adds to an existing line of code.

In many cases, the original source code has been reformatted; we’ve added line breaks and reworked indentation to accommodate the available page space in the book. In rare cases, even this was not enough, and listings include line-continuation markers (➥). Additionally, comments in the source code have often been removed from the listings when the code is described in the text. Code annotations accompany many of the listings, highlighting important concepts.

You can get executable snippets of code from the liveBook (online) version of this book at https://livebook.manning.com/book/csharp-concurrency.

Source code for the examples in this book is available for download from https://github.com/nirdobovizki/AsynchronousAndMultithreadedProgrammingInCSharp and the author web site at https://nirdobovizki.com. The complete code for the examples in the book is also available for download from the Manning website at https://www.manning.com/books/csharp-concurrency.

liveBook discussion forum

Purchase of C# Concurrency includes free access to liveBook, Manning’s online reading platform. Using liveBook’s exclusive discussion features, you can attach comments to the book globally or to specific sections or paragraphs. It’s a snap to make notes for yourself, ask and answer technical questions, and receive help from the author and other users. To access the forum, go to https://livebook.manning.com/book/csharp-concurrency/discussion. You can also learn more about Manning’s forums and the rules of conduct at https://livebook.manning.com/discussion.

Manning’s commitment to our readers is to provide a venue where a meaningful dialogue between individual readers and between readers and the author can take place. It is not a commitment to any specific amount of participation on the part of the author, whose contribution to the forum remains voluntary (and unpaid). We suggest you try asking the author some challenging questions lest his interest stray! The forum and the archives of previous discussions will be accessible from the publisher’s website as long as the book is in print.

about the author

Nir Dobovizki is a software architect and a senior consultant. He’s worked on concurrent and asynchronous systems, mostly high-performance servers, since the late 1990s. He’s used both in native code and, since the introduction of .NET 1.1 in 2003, .NET and C#. He has worked with multiple companies in the medical, defense, and manufacturing industries to solve problems arising from incorrect usage of multithreading and asynchronous programming.

about the cover illustration

The figure on the cover of C# Concurrency is “Homme Tatar de Tobolsk” or “Tatar man from Tobolsk,” taken from a collection by Jacques Grasset de Saint-Sauveur, published in 1788. Each illustration is finely drawn and colored by hand.

In those days, it was easy to identify where people lived and what their trade or station in life was just by their dress. Manning celebrates the inventiveness and initiative of the computer business with book covers based on the rich diversity of regional culture centuries ago, brought back to life by pictures from collections such as this one.

Part 1. Asynchronous programming and multithreading basics

The first part of this book covers asynchronous programming and multi-threading in C#, explaining what they are and how to implement them. This part highlights common pitfalls and provides guidance on how to avoid them.

We start with the concepts and terminology of multithreading and asynchronous programming, as used in computer science generally and in C# specifically (chapter 1). Next, we’ll dive right into how asynchronous programming with async/await works in C# (chapters 2 and 3). Then, we’ll discuss multithreading in C# (chapter 4) and how multithreading and asynchronous programming work together (chapter 5). Finally, we’ll talk about when to use async/await (chapter 6) and how to use multithreading properly (chapter 7).

By the end of part 1, you will learn how to write correct multithread code and use async/await properly.

1 Asynchronous programming and multithreading

- Introduction to multithreading

- Introduction to asynchronous programming

- Asynchronous programming and multithreading used together

As software developers, we often strive to make our applications faster, more responsive, and more efficient. One way to achieve this is by enabling the computer to perform multiple tasks simultaneously, maximizing the use of existing CPU cores. Multithreading and asynchronous programming are two techniques commonly used for this task.

Multithreading allows a computer to appear as if it is executing several tasks at once, even when the number of tasks exceeds the number of CPU cores. In contrast, asynchronous programming focuses on optimizing CPU usage during operations that would typically make it wait, which ensures the CPU remains active and productive.

Enabling a computer to perform multiple tasks simultaneously is extremely useful. It helps keep native applications responsive while they work and is essential for building high-performance servers that can interact with many clients at the same time.

Both techniques can be employed to create responsive client applications or servers that handle a few clients. But when combined, they can greatly boost performance, allowing servers to handle thousands of clients at once.

This chapter will introduce you to multithreading and asynchronous programming and illustrate why they are important. In the rest of the book, we’ll talk about how to use them correctly in .NET and C#, especially focusing on the C# async/await feature. You will learn how these technologies work, go over the common pitfalls, and see how to use them correctly.

1.1 What is multithreading?

Before we begin talking about async/await, we need to understand what multithreading and asynchronous programming are. To do so, we are going to talk a bit about web servers and pizza making. We’ll start with the pizza (because it’s tastier than a web server).

The high-level process of pizza making in a takeout place is typically as follows:

-

The cook receives an order.

-

The cook does stuff—takes preprepared dough, shapes it, and adds sauce, cheese, and toppings.

-

The cook places the pizza in the oven and waits for it to bake (this is the longest bit).

-

The cook then does more stuff—takes the pizza out of the oven, cuts it, and places it in a box.

-

The cook hands the pizza to the delivery person.

This is not a cookbook, so obviously, our pizza baking is a metaphor for one of the simplest server scenarios out there—a web server serving static files. The high-level process for a simple web server is as follows:

-

The server receives a web request.

-

The server performs some processing to figure out what needs to be done.

-

The server reads a file (this is the longest bit).

-

The server does some more processing (such as packaging the file content).

-

The server sends the file content back to the browser.

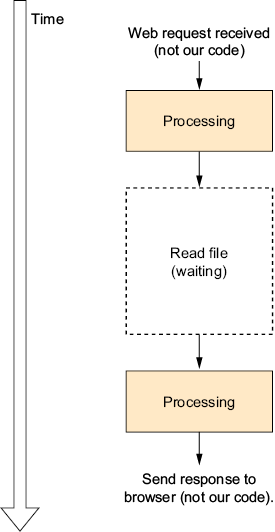

For most of the chapter, we are going to ignore the first and last steps because, in most backend web frameworks (including ASP.NET and ASP.NET Core), they are handled by the framework and not by our code. We will talk about them briefly near the end of this chapter. Figure 1.1 illustrates the web request process.

Figure 1.1 Single-threaded, single-request flow

Now back to the pizza. In the simplest case, the cook will follow the steps in order, completely finishing one pizza before starting the next one. While the pizza is baking, the cook will just stand there staring at the oven and do nothing (this is a fully synchronous single-threaded version of the process).

In the world of web servers, the cook is the CPU. In this single-threaded web server, we have straightforward code that performs the operations required to complete the web request, and while the file is read from disk, the CPU is frozen doing nothing (in practice, the operating system will suspend our thread while this happens and hand over the CPU to another program, but from our program point of view, it looks like the CPU is frozen).

This version of the process has some advantages—it is simple and easy to understand. You can look at the current step and know exactly where we are in the process. As two things are never taking place at the same time, different jobs can’t interfere with each other. Finally, this version requires the least amount of space and uses fewer resources at any one time because we only handle one web request (or pizza) at a time.

This single-threaded synchronous version of the process is apparently wasteful because the cook/CPU spends most of their time doing nothing while the pizza is baking in the oven (or the file is retrieved from disk), and if our pizzeria isn’t going out of business, we are going to receive new orders faster than we can fulfill them.

For this reason, we want the cook to make more than one pizza at the same time. One approach might be to use a timer and have it beep every few seconds. Every time the timer beeps, the cook will stop whatever they are doing and make a note of what they did when they stopped. The cook will then start a new pizza or continue making the previous one (ignoring the unready pizzas in the oven) until the timer beeps again.

In this version, the cook is attempting to do multiple things at the same time, and each of those things is called a thread. Each thread represents a sequence of operations that can happen in parallel with other similar or different sequences.

This example may seem silly, as it is obviously inefficient, and our cook will spend too much time putting things away and picking up stuff. Yet this is exactly how multithreading works. Inside the CPU, there’s a timer that signals when the CPU should switch to the next thread, and with every switch, the CPU needs to store whatever it was doing and load the other thread’s status (this is called a context switch).

For example, when your code reads a file, the thread can’t do anything until the file’s data is retrieved from disk. During this time, we say the thread is blocked. Having the system allocate CPU time to a blocked thread would obviously be wasteful, so when a thread begins reading a file, it is switched to a blocked state by the operating system. When entering this state, the thread will immediately release the CPU to the next waiting thread (possibly from another program), and the operating system will not assign any CPU time to the thread while in this state. When the system finishes reading the file, the thread exits the blocked state and is again eligible for CPU time.

The operations that can cause the thread to become blocked are called blocking operations. All file and network access operations are blocking, as is anything else that communicates with anything outside the CPU and memory; moreover, all operations that wait for another thread can block.

Back in the pizzeria, in addition to the time we spend switching between pizzas, there’s also all the information the cook needs to get back to exactly the same place they were before switching tasks. In our software, every thread, even if not running, consumes some memory, so while it’s possible to create a large number of threads, each of them executing a blocking operation (so they are blocked most of the time and not consuming CPU time), this is wasteful of memory. It will slow the program down as we increase the number of threads because we must manage all the threads. At some point, we will either spend so much time managing threads that no useful work will get done or we will just run out of memory and crash.

Even with all this inefficiency, the multithreading cook, who jumps from one pizza to another like a crazy person, will make more pizzas in the same amount of time, unless they can’t make progress or crash (I know, a cook can’t crash; no metaphor is perfect). This mostly happens because the single-threaded cooks from before spent most of their time waiting while the pizza was in the oven.

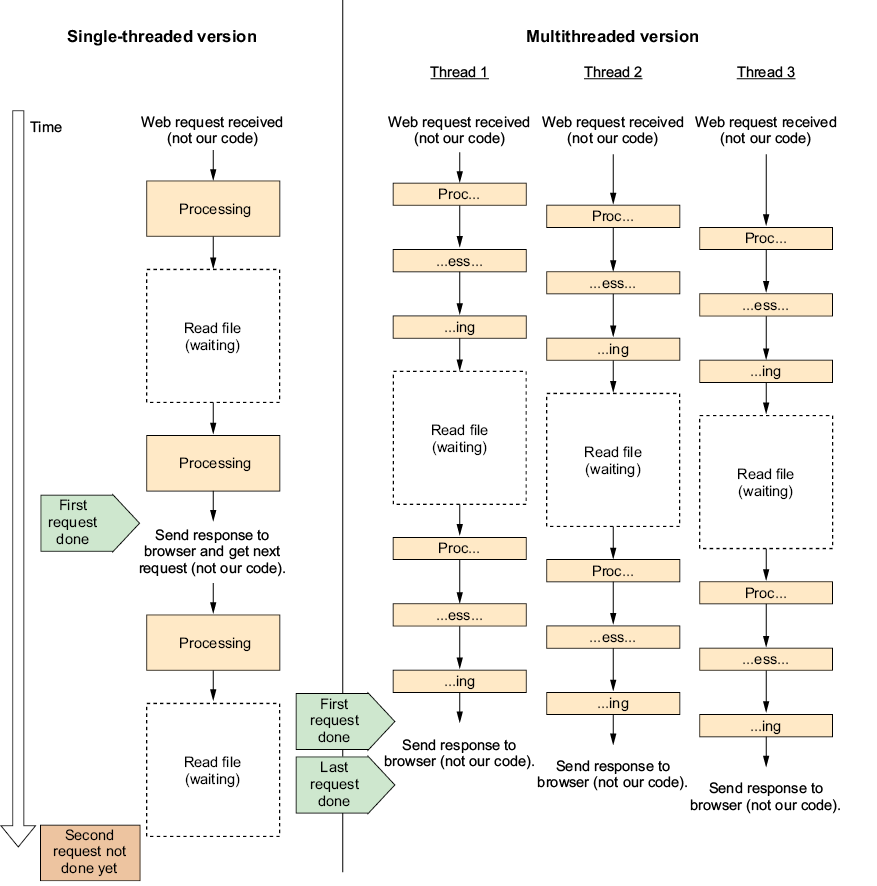

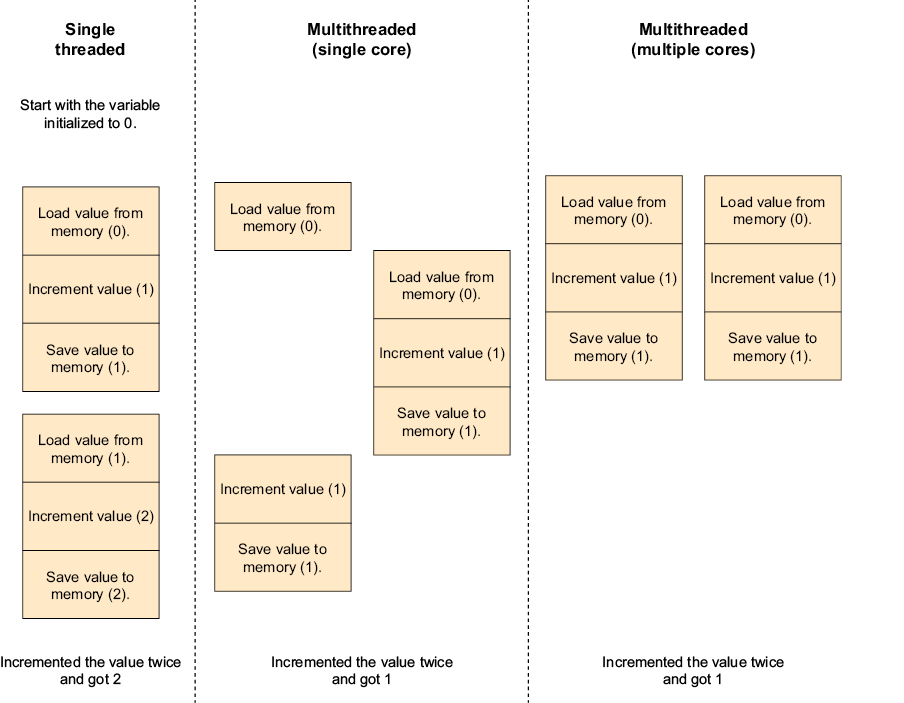

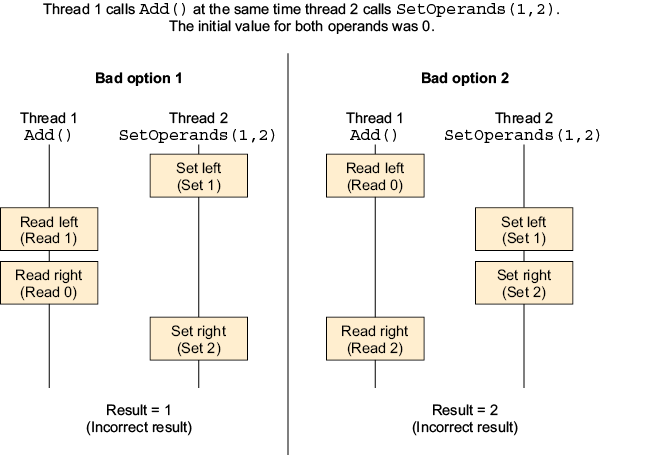

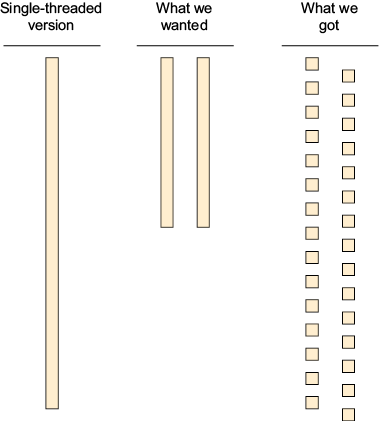

As illustrated in figure 1.2, because we only have one CPU core (I know, everyone has multicore CPUs nowadays; we’ll talk about them soon), we can’t really do two things simultaneously. All the processing parts happen one after the other and are not truly in parallel; however, the CPU can wait as many times as you like in parallel. And that’s why our multithread version managed to process three requests in significantly less time than it took the single-threaded version to process two.

Figure 1.2 Single-threaded versus multithread with multiple requests

If you look closely at figure 1.2, you can see that while the single-threaded version handled the first request faster, the multithreaded version completed all three before the single-threaded version managed to complete the second request. This shows us the big advantage of multithreading, which is a much better utilization of the CPU in scenarios that involve waiting. It also shows the price we pay—just a little bit of extra overhead every step of the way.

Until now, we’ve talked about single-core CPUs, but all modern CPUs are multicore. How does that change things?

1.2 Introducing multicore CPUs

Multicore CPUs are conceptually simple. They are just multiple single-core CPUs packed into the same physical chip.

In our pizzeria, having an eight-core CPU is equivalent to having eight cooks carrying out the pizza-making tasks. In the previous example, we had one cook who could only do one thing at a time but pretended to do multiple things by switching between them quickly. Now we have eight cooks, each able to do one task at the same time (for a total of eight tasks at once), and each pretending to do multiple things by switching between tasks quickly.

In software terms, with multicore CPUs, you can really have multiple threads running simultaneously. When we had a single-core CPU, we sliced the work into tiny parts and interleaved them to make it seem like they were running at the same time (while, in fact, only one thing could run). Now, with our example eight-core CPU, we still slice the work into tiny parts and interleave them, but we can run eight of those parts at the same time.

Theoretically, eight cooks can make more pizzas than only one; however, multiple cooks may unintentionally interfere with each other’s work. For example, they might bump into each other, try to put a pizza in the oven at the same time, or need to use the same pizza cutter—the more cooks we have, the greater the chance of this happening.

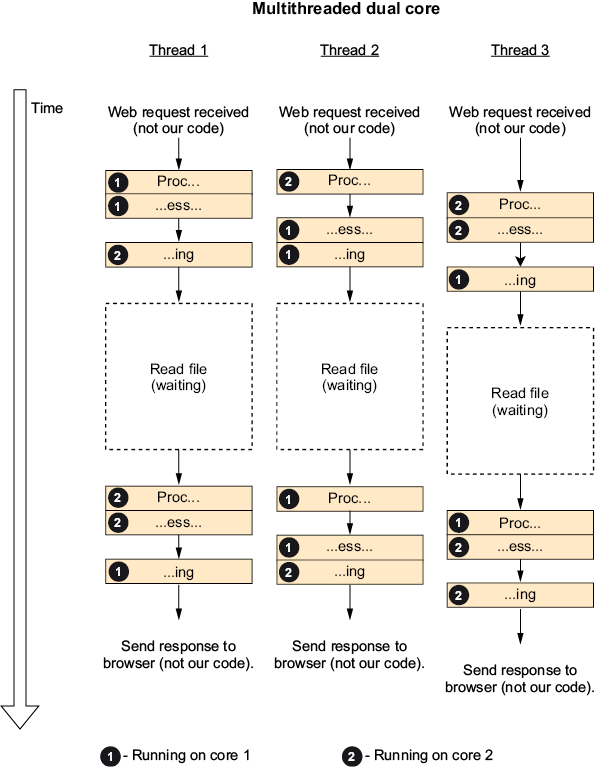

Figure 1.3 takes the same multithreaded work we had in figure 1.2 and shows how it would run on a dual-core CPU (just two cores because a diagram with enough work for an eight-core CPU would be too big to illustrate here).

Figure 1.3 Three requests on a dual-core CPU

Note that by default, there is no persistent relation between threads and cores. A thread can jump between cores at any time (you can set threads to run on specific cores, which is called “thread affinity,” and except in really special circumstances, something you shouldn’t do).

The dual-core CPU cut the time we spent processing by half compared to the single-core version but didn’t affect the time we spent waiting. So while we did get a significant speedup, it did not cut the total time in half. Until now, we’ve gotten most of the performance improvement from doing other stuff while waiting for the hard drive to read the file, but we’ve paid for it with all the overhead and complexity of multithreading. Maybe we can reduce this overhead.

1.3 Asynchronous programming

Back in the pizzeria, there’s a rational solution we ignored. The cook should make a single pizza without stopping and switching to other pizzas, but when the pizza is in the oven, they can start the next pizza instead of just sitting there. Later, whenever the cook finishes something, they can check whether the pizza in the oven is ready, and if it is, they can take it out, cut it, put it in a box, and hand it over to the delivery person.

This is an example of asynchronous programming. Whenever the CPU needs to do something that happens outside the CPU itself (for example, reading a file), it sends the job to the component that handles it (the disk controller) and asks this component to notify the CPU when it’s done.

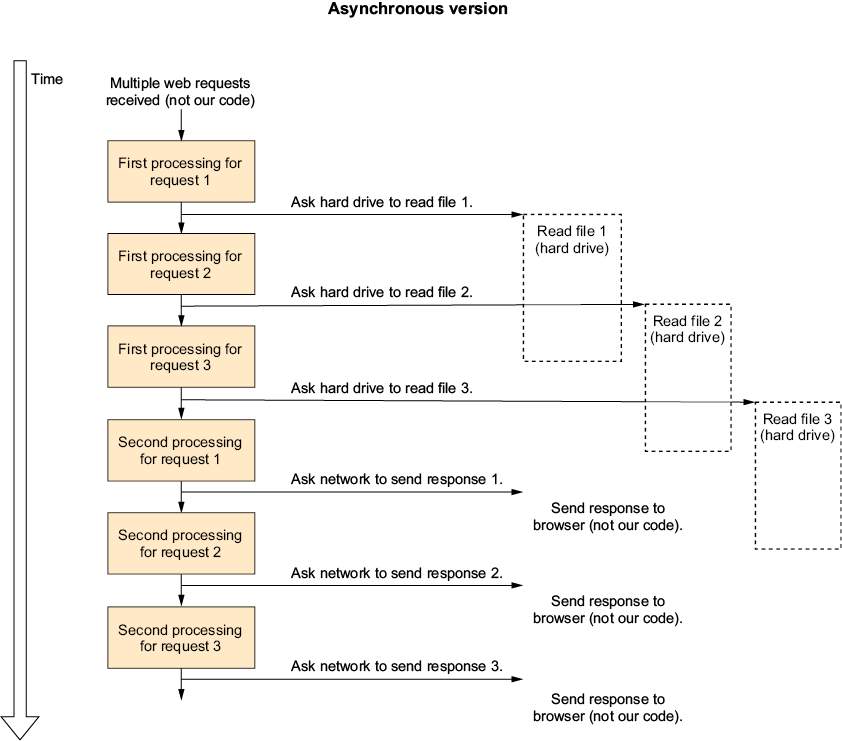

The asynchronous (also called nonblocking) version of the file function just queues the operation with the operating system (that will then queue it with the disk controller) and returns immediately, letting the same thread do other stuff instead of waiting (figure 1.4). Later, we can check whether the operation has been completed and access the resulting data.

If you compare all the diagrams in this chapter, you will see that this single-threaded asynchronous version is the fastest of all the options. It completes the first request almost as fast as the first single-threaded version while also completing the last request almost as fast as the dual-core multithreaded version (without even using a second core), which makes it the most performant version so far.

Figure 1.4 Single-threaded asynchronous web server with three web requests

You can also clearly see that figure 1.4 is kind of a mess and is more difficult to read than the previous diagrams, and that is even without indicating in the diagram that the “second processing” steps depend on completing the read operations. The thing that makes the diagram more difficult to understand is that you can no longer see the entire process; the work done for every request is broken up into parts, and unlike the threading example, those parts are not connected to each other.

This is the reason that while multithreading is widely used, until the introduction of async/await, asynchronous programming has only been used by people building high-performance servers (or using environments where you have no other choice; for example, JavaScript). Like in figure 1.4, the code must be broken into parts that are written separately, which made the code difficult to write and even more difficult to understand—until C# introduced the async/await feature that lets one write asynchronous code as if it were normal synchronous code.

Also, in figure 1.4, I indicated that I use the same asynchronous techniques as for reading the file when sending the response back to the browser. That’s because the first and last steps in our web request sequence, “get web request” and “send response to browser,” are both performed mostly by the network card and not the CPU, just like reading the file is done by the hard drive, so the two can be performed asynchronously without making the CPU wait.

Even with multithreading only, without asynchronous programming, it’s completely possible to write servers that can handle low and medium loads by opening a thread for every connection. However, if you need to build a server that can serve thousands of connections at the same time, the overhead of so many threads will slow the server down to the point of not being able to handle the load or will crash the server outright.

We talked about asynchronous programming as a way to avoid multithreading, but we can’t take advantage of the power of multicore CPUs without multithreading. Let’s see whether we can use multithreading and asynchronous programming jointly to get even more performance.

1.4 Using multithreading and asynchronous programming together

Let’s jump back to the pizzeria one last time. We can improve our pizza making even more: instead of having the cook actively check the oven, just make the oven beep when the pizza is ready, and when the oven beeps, the cook can stop what they are doing, take the pizza out, put it in a box, hand it over to the delivery person, and then get back to what they were doing.

The software equivalent is, when starting the asynchronous operation, to ask the operating system to notify our program by calling a callback function we registered when starting the asynchronous operation. That callback function will need to run on a new thread (actually, a thread pool thread; we will talk about the thread pool later in the book) because the original calling thread is not waiting and is currently doing something else. That’s why asynchronous programming and multithreading work well together.

1.5 Software efficiency and cloud computing

Today, we can just use our favorite cloud provider’s “serverless” option and run 10,000 copies of our single-threaded code at the same time. So do we need to bother with all this multithreaded and asynchronous code?

Well, theoretically, we can just throw a lot of processing power at the problem. With the modern cloud offerings, you can basically get infinite compute power whenever you want, but you do have to pay for it. Because you pay exactly for what you use, every bit of efficiency you get saves you money.

Before cloud computing, you would buy a server, and as long as you didn’t max out the server you bought, the efficiency of your code didn’t really matter. Today, shaving off a part of a second of every request in a high-load site can save a significant amount of money.

In the past, CPUs got faster all the time. The rule of thumb was that CPU speed doubled every two years, which meant that you could fix slow software by waiting a bit and buying a new computer. Unfortunately, this is no longer the case because the modern CPU got so close to the maximum number of transistors that can be put in a specific area that it is basically not possible to make a single core much faster. Consequently, the single-thread performance of CPUs now rises rather slowly, and our only choice to improve performance is to use more CPU cores (there’s a very influential paper called “The Free Lunch Is Over” by Herb Sutter covering this topic; see www.gotw.ca/publications/concurrency-ddj.htm).

Nonetheless, the modern CPU is still extremely fast, faster than other computer components, and obviously, much faster than any human. Therefore, a typical CPU spends most of its time waiting. Sometimes it’s waiting for user input, and other times, it’s waiting for the hard drive, but it’s still waiting. Multithreading and asynchronous programming enable employing this waiting time to do useful work.

Summary

-

Multithreading is switching between several things on the same CPU fast enough to make it feel like they are all running simultaneously.

-

A thread is one of those things running simultaneously.

-

A thread has significant overhead.

-

Switching between threads is called context switching, and it also has overhead.

-

When doing stuff that happens outside the CPU, such as reading a file or using a network, the thread must wait until the operation is complete to get the result and continue to operate on it, which is called a blocking operation.

-

Asynchronous programming frees up the thread while operations are taking place by asking the system to send a notification when the operation ends instead of waiting, which is called a nonblocking operation. The program then needs to pick up processing later when the data is available, usually on a different thread.

-

We need asynchronous and multithreading techniques because the complexity of our software grows faster than the single-thread performance of our CPUs.

-

Because in cloud computing we pay for the exact resources we use, asynchronous and multithreading techniques that increase efficiency can save us some money.

2 The compiler rewrites your code

- How the C# compiler supports features that do not exist in the .NET runtime

- The implementation of lambda functions by the compiler

- The implementation of

yield returnby the compiler

The compiler modifies your code, which means that the output is not a direct representation of the source code. This is done for two main reasons: to reduce the amount of typing required by boilerplate code generation and to add features not supported by the underlying platform. One such feature is async/await, which is primarily implemented by the C# compiler rather than the .NET runtime. To write correct asynchronous code, avoid the potential pitfalls, and especially to debug code, it’s important to understand how the compiler transforms your code, that is, what happens when your code runs.

This chapter discusses how the C# compiler rewrites your code during compilation. However, because async/await is probably the most complicated code transformation in the current version of C#, we’re going to start with lambda functions and yield return, which are implemented using the same techniques as async/await. By starting with simpler compiler features, we can learn the concepts behind async/await without having to deal with the complexities of asynchronous programming and multithreading. The next chapter will show how everything translates directly to async/await.

Now let’s see how the C# compiler adds advanced features not supported by the underlying .NET runtime, starting with lambda functions (note that the C# lambda functions have nothing to do with the Amazon AWS Lambda service).

2.1 Lambda functions

Let’s start with one of the simpler C# features implemented by the compiler—lambda functions. These functions are code blocks you can write inline, inside a larger method that can be used just like a standalone method. Lambda functions allow us to take code that, for technical reasons, needs to be a different method and write it in-line where it is used, making the code easier to read and understand. Lambda functions can also use local variables from the method that defined them.

However, the .NET runtime does not have in-line functions—all code in .NET must be in the form of methods that are part of classes. So how do lambda functions work? Let’s take a very simple example: we will create a timer, set it to call us in 1 second, and then write the string "Elapsed" to the console.

Listing 2.1 Using lambda functions

public class LambdaDemo1

{

private System.Timers.Timer? _timer;

public void InitTimer()

{

_timer = new System.Timers.Timer(1000);

_timer.Elapsed += (sender,args) => Console.WriteLine("Elapsed");

_timer.Enabled = true;

}

}

If we run this example, unsurprisingly, the program will print "Elapsed" after 1 second. The line I want you to focus on is the one that sets the _timer.Elapsed property. This line defines a lambda function and passes it the Elapsed property.

But I said that in .NET, all code must be in methods defined in classes, so how is this done? The answer is that the C# compiler rewrites your lambda function as a normal method. If you look at the compile output, it would be similar to

public class LambdaDemo2

{

private System.Timers.Timer? _timer;

private void HiddenMethodForLambda( ❶

object? sender, System.Timers.ElapsedEventArgs args)

{

Console.WriteLine("Elapsed");

}

public void InitTimer()

{

_timer = new System.Timers.Timer(1000);

_timer.Elapsed += HiddenMethodForLambda;

_timer.Enabled = true;

}

}

❶ The lambda function becomes a regular method.

The compiler rearranged our code and moved the body of the lambda function into a new method. That way, we can write the code inline, and the runtime can treat it as a normal method.

But the lambda function can also use local variables from the method that defined them. Let’s add a variable defined in the InitTimer method and used inside the lambda function.

Listing 2.2 Lambda function that uses local variables

public class LambdaDemo3

{

private System.Timers.Timer? _timer;

public void InitTimer()

{

int aVariable = 5; ❶

_timer = new System.Timers.Timer(1000);

_timer.Elapsed += (sender,args) => Console.WriteLine(aVariable);

_timer.Enabled = true;

}

}

❶ The new variable

If we try to apply the same transformation on this code like in the previous example, we will get two methods that share a local variable. This is obviously not supported and doesn’t even make sense. How can the compiler handle that? Well, it needs something that can hold data that can be accessed from two places, and we have such a thing in .NET: classes. So the compiler creates a class to hold our “local” variable:

public class LambdaDemo4

{

private System.Timers.Timer? _timer;

private class HiddenClassForLambda ❶

{

public int aVariable; ❷

public void HiddenMethodForLambda( ❸

object? sender, ❸

System.Timers.ElapsedEventArgs args) ❸

{ ❸

Console.WriteLine(aVariable); ❸

} ❸

}

public void InitTimer()

{

var hiddenObject = new HiddenClassForLambda();

hiddenObject.aVariable = 5;

_timer = new System.Timers.Timer(1000);

_timer.Elapsed += hiddenObject.HiddenMethodForLambda;

_timer.Enabled = true;

}

}

❶ The compiler creates a class for our lambda function.

❷ The local variable becomes a field of the class.

❸ The lambda function becomes a method inside that class.

Here, the compiler created a new method and an entirely new class. The local variable was moved to be a member of this class, and both the InitTimer method and the lambda function reference this new class member. This changes the way the local variable is accessed outside the lambda function—some operation that only used local variables can turn into access to class member fields when you introduce a lambda. If there are multiple lambda functions defined in the same method, they are placed in the same class so they can share local variables. The important point is that there is no magic here—everything the compiler adds to the .NET runtime is done by just writing code that we can write ourselves because we have basically the same access to the runtime’s functionality as the compiler.

Now that we’ve seen the lambda function transformation, let’s take a look at something a bit more complicated.

2.2 Yield return

The yield return feature uses the same tricks we’ve seen in the lambda function example to do even more advanced stuff. It’s also somewhat similar to async/await, but without the complexities of multithreading and asynchronous code, so it’s a good way to learn the fundamentals of async/await.

What is yield return? It basically lets you write functions that generate a sequence of values you can use in foreach loops directly without using a collection such as a list or an array. Each value can be used without waiting for the entire sequence to be generated. Let’s write something extremely simple—a method that returns a collection with two items, the numbers 1 and 2. The following listing shows what it looks like without yield return.

Listing 2.3 Using a list

private IEnumerable<int> NoYieldDemo()

{

var result = new List<int>();

result.Add(1);

result.Add(2);

return result;

}

public void UseNoYieldDemo()

{

foreach(var current in NoYieldDemo())

{

Console.WriteLine($"Got {current}");

}

}

Unsurprisingly, this code will output two lines, Got 1 and Got 2. The following listing shows the same functionality with yield return.

Listing 2.4 Using yield return

private IEnumerable<int> YieldDemo()

{

yield return 1;

yield return 2;

}

public void UseYieldDemo()

{

foreach(var current in YieldDemo())

{

Console.WriteLine($"Got {current}");

}

}

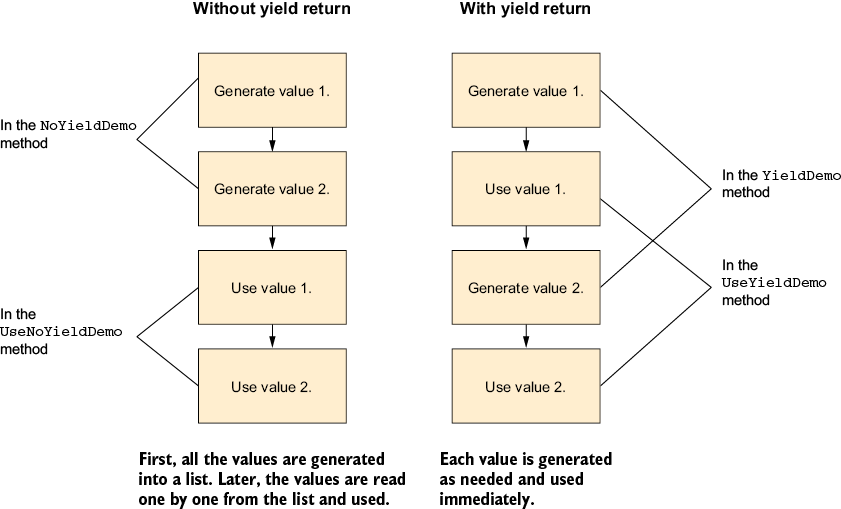

The code looks very similar, and the results are the same. So what is the big difference? In the first example, all the values were generated first and then used, while in the second example, each value was generated just when it was needed, as illustrated in figure 2.1.

Figure 2.1 Using a collection versus using yield return

In the non-yield return version, the code ran normally. The NoYieldDemo method started, did some stuff, and then returned. However, the YieldDemo method behaved differently—it suspended at startup, and then, every time a value was needed, it resumed, ran the minimal amount of code to provide the next value (until the next yield return), and suspended itself again. But .NET doesn’t have a way to suspend and resume code. What kind of sorcery is that?

Obviously, there is no sorcery, as magic does not exist in computer science. Just like in the case of the lambda function examples we’ve seen before, the compiler just rewrote our code.

In computer science, code that can be suspended, resumed, and potentially return multiple values is called a coroutine. In C#, it is called iterator methods in relation to yield return and async methods in relation to async/await. This book uses the C# terminology.

The IEnumerable<T> interface that I used as the return type for the YieldDemo method is the most basic interface for anything that can be treated as collections or sequences of items (including everything you can use foreach to iterate over). Every generic collection in .NET implements this interface (older collections classes, from before generics were introduced in .NET 2.0, use the nongeneric IEnumerable interface instead). This interface has just one method that returns an IEnumerator<T>, and this enumerator does all the work. An enumerator can do two things: return the current value and move to the next one.

The IEnumerator<T> interface is important because it lets us (and the compiler) write code that handles a sequence of items without knowing anything about that sequence. Every collection in .NET implements IEnumerable<T>, so constructs that deal with sequences (like the foreach loop) don’t need to know how to work with every type of collection—they just need to know how to work with IEnumerable<T>. The inverse is also true—everything that implements IEnumerable<T> is automatically a sequence of items that can be used with foreach loops and all the other relevant parts of .NET and C#.

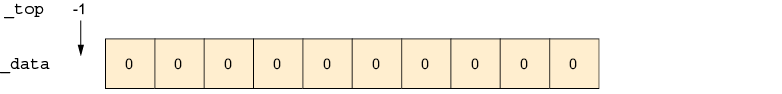

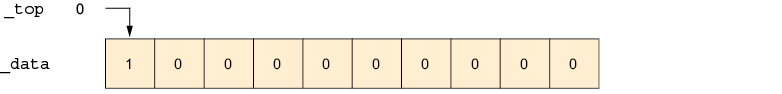

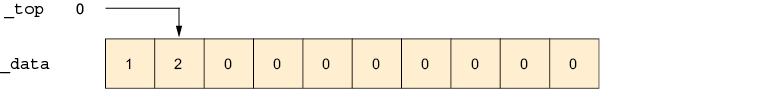

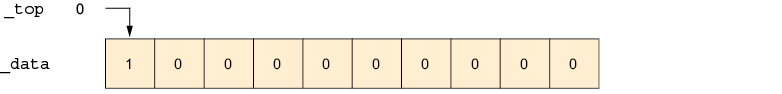

Just like in the lambda example, the compiler rewrote the YieldDemo method into a class, but this time, a class that implements IEnumerator<int>, so the foreach loop knows what to do with it. Let’s rewrite the code ourselves to get the same result.

To begin, YieldDemo returned an IEnumerable<int>, so obviously, we have a class that implements this interface, so it can be returned from YieldDemo. Like I said before, the only thing the IEnumerable<int> does is provide an IEnumerator<int> (for historical reasons, to be compatible with code written before .NET 2.0, in addition to IEnumerator<int>, we also need to provide a nongeneric IEnumerator, and we will use the same class for both):

public class YieldDemo_Enumerable : IEnumerable<int> ❶ { public IEnumerator<int> GetEnumerator() { return new YieldDemo_Enumerator(); ❷ } IEnumerator IEnumerable.GetEnumerator() { return new YieldDemo_Enumerator(); ❷ } }

❶ Our IEnumerable<int>

❷ Returns an IEnumerator<int>

Now we need to write our IEnumerator<int> that will do all the work:

public class YieldDemo_Enumerator : IEnumerator<int>

{

We need a Current property to hold the current value:

public int Current { get; private set; }

Now comes the important part. Here, we divide our original code into chunks, breaking it just after each yield return, and replace the yield return with Current =:

private void Step0()

{

Current = 1;

}

private void Step1()

{

Current = 2;

}

The next part is the MoveNext method. This method runs the correct chunk from the previous paragraph to update the Current property. It uses the _step field to remember which step to run, and when we run out of steps, it returns false to indicate we are done (if you have a computer science background, you may recognize this as a simple implementation of a finite state machine):

private int _step = 0; ❶ public bool MoveNext() { switch(_step) { case 0: Step0(); ++_step; break; case 1: Step1(); ++_step; break; case 2: return false; ❷ } return true; }

❶ A variable to keep track of where we are

❷ We’re done; return false.

Now there’s some necessary technical stuff not relevant to this example:

object IEnumerator.Current => Current;

public void Dispose() { }

public void Reset() { }

}

And finally, wrap the classes we generated in a method so we can call it:

public IEnumerable<int> YieldDemo()

{

return new YieldDemo_Enumerable();

}

The actual compiler-generated code is longer and more complicated, mostly because I completely ignored all the possible error conditions. However, conceptually, this is what the compiler does. The compiler rewrote our code into chunks and called each chunk in turn when needed, giving us an illusion of code that suspends and resumes.

For the yield return feature to work, we need

-

The code transformation that divided our code into chunks and simulated a single method that can be suspended and resumed

-

A standard representation for anything collection-like (

IEnumerable<T>) so that everyone can use the results of this transformation

That brings us directly to async/await and the Task class in the next chapter.

Summary

-

The C# compiler will rearrange and rewrite your code to add features that do not exist in .NET.

-

For lambda functions, the compiler moves code into a new method and shared data into a new class.

-

For

yield return, the compiler also divides your code into chunks and wraps them in a class that runs the correct chunk at the correct time to simulate a function that can be suspended and resumed.

3 The async and await keywords

- Using

TaskandTask<T>to check whether an operation has completed - Using

TaskandTask<T>to notify your code when the operation has completed - Using

TaskandTask<T>in synchronous code - How

async/awaitworks

In the previous chapter, we saw how the compiler can transform our code to add language features. In this chapter, we’ll learn how it applies to async/await.

async/await is a feature that lets us write asynchronous code as if it were normal synchronous code. With asynchronous programming, when we perform an operation that would normally make the CPU wait (usually for data to arrive from some device—for example, reading a file), instead of waiting, we just do something else. Making asynchronous code look like normal code is kind of a big deal because traditionally, you had to divide each sequence of operations into small parts (breaking at each asynchronous operation) and call the right part at the right time. Unsurprisingly, this makes the code confusing to write.

3.1 Asynchronous code complexity

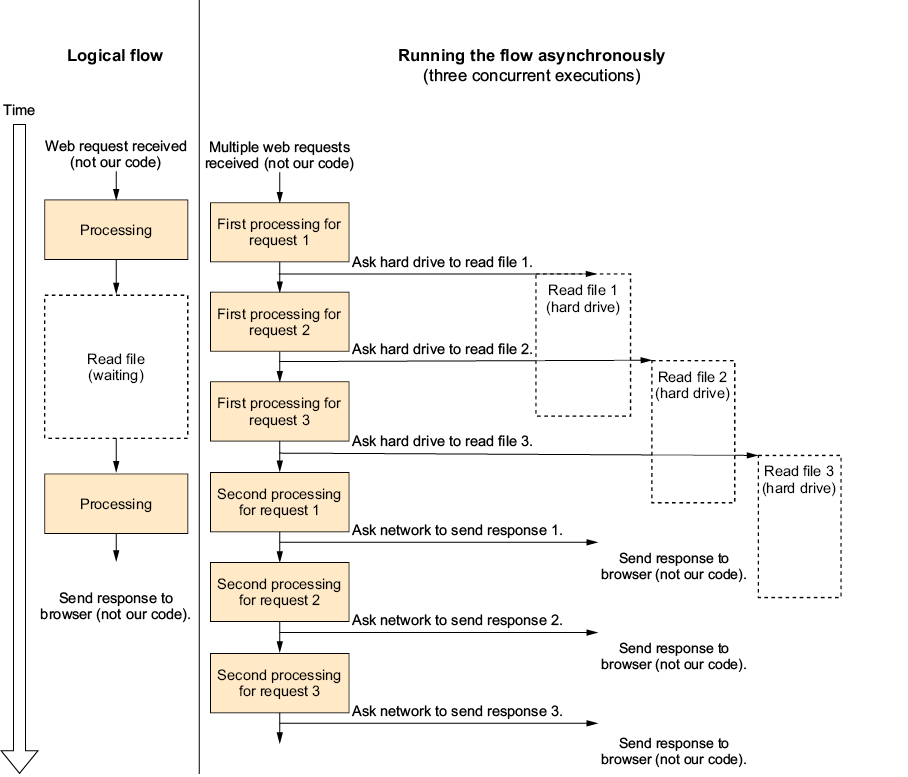

To demonstrate this, I placed figures 1.1 and 1.4 side by side (figure 3.1).

Figure 3.1 Logical flow versus code running asynchronously

Clearly, the left side describing the logical flow is simple, linear, and easy to understand, while the right side that describes how the asynchronous version is running is none of those things (it’s also very difficult to debug).

Traditionally, asynchronous programming requires us to design and write our code for the right diagram, as well as divide our code into chunks that do not represent the logical flow of the code. Also, we need code to manage the whole mess and decide what to run when.

The async/await feature lets us write code that describes the logical flow, and the compiler will transform it to something that can run asynchronously automatically—it lets us write our code as shown on the left side of the diagram and have it run like the right side.

Let’s illustrate this through a simple example—a method that reads the image width (in pixels) of a BMP image file. I’ve chosen BMP because unlike more modern image file formats, all the data in the BMP file is at a fixed location, which makes it easy to extract. We’ll read the image width in two steps:

-

First, we check whether the file is a BMP image file at all. We do that by looking at the beginning of the file: BMP image files start with “BM.”

-

We will then jump to the eighteenth byte in the file where the width is stored as a 32-bit (4 bytes) integer.

Our method will return the image width in pixels or throw an exception if the file is not a BMP image and if there are other errors. Because we haven’t talked about how to write asynchronous code yet, the first version of this example will be simple, old-style, synchronous code.

Listing 3.1 Reading BMP width, non-asynchronous version

int GetBitmapWidth(string path)

{

using (var file = new FileStream(path, FileMode.Open, FileAccess.Read))

{

var fileId = new byte[2]; ❶

var read = file.Read(fileId, 0, 2); ❶

if (read != 2 || fileId[0] != 'B' || fileId[1] != 'M') ❶

throw new Exception("Not a BMP file"); ❶

file.Seek(18, SeekOrigin.Begin); ❷

var widthBuffer = new byte[4]; ❷

read = file.Read(widthBuffer, 0, 4); ❷

if(read != 4) throw new Exception("Not a BMP file"); ❷

return BitConverter.ToInt32(widthBuffer, 0); ❷

}

}

❶ The file should start with “BM.”

❷ Reads the width from byte 18

As you can see, the code is straightforward. We read the first two bytes and check whether their value is “BM.” Next, we skip to the eighteenth byte and read the image width.

3.2 Introducing Task and Task<T>

Now let’s make this code asynchronous. We have two excellent reasons for doing so:

-

The first and most important reason is that this is a book about asynchronous programming.

-

The second reason is that the main thing our code does is read a file, and reading a file is a blocking operation that will make our thread wait for data to arrive from the hard disk. That means we can improve efficiency by using our thread to do other stuff while waiting instead of making the operating system switch to another thread (or another process entirely).

The main thing our method does is read a file using the Stream.Read method, and luckily, there’s an asynchronous version of the Stream.Read method called Stream.ReadAsync. Let’s take a look at the difference in the method signature between those two methods:

public int Read(byte[] buffer, int offset, int count); public Task<int> ReadAsync(byte[] buffer, int offset, int count, CancellationToken cancellationToken);

We can see the following two differences in the method signature:

-

While

Readreturns anint,ReadAsyncreturnsTask<int>.TheTaskandTask<T>classes are an important part of modern asynchronous programming in C#, and we will explore their usage here. -

ReadAsyncalso accepts aCancellationToken, but we’re going to ignore it for now because there’s an entire chapter about it later in this book.

Earlier in this chapter, I wrote that for asynchronous code, we need to divide our code into parts, and we also need a system to manage the execution of those parts. Task is the class that we use to interact with that system. A Task does multiple things: it represents an ongoing asynchronous operation, lets us schedule code to run when an asynchronous operation ends (we’ll talk about these two in this chapter), and lets us create and compose asynchronous operations (we’ll talk about those later in this book).

Chapter 2 introduced us to IEnumerable<T> and how it enables yield return. The Task and Task<T> classes are the IEnumerable<T> of async programming. They are a standard way to represent the async stuff, so everyone knows how to work with it.

The name of the Task class is confusing; the word “task” implies there’s an operation, something that runs, but this is not the only meaning of Task. A Task represents an event that may happen in the future, while Task<T> represents a value that may be available in the future. Those events and values may or may not be the results of something we will describe using the English word task. In computer science, those concepts are often called future, promise, or deferred value, but in this book, we’ll refer to them using the .NET/C# term Task.

It’s important to note that while it is common to create a Task or a Task<T> for code we run in the background (as we’ll see in the next chapter), some classes and methods in .NET use the word task to refer to this code or to manage context information related to it. The Task or Task<T> objects themselves do not let you manage the background operation and do not carry context related to it. A Task just lets you know when that background operation finishes running (the Task object represents the event of the background operation ending), and Task<T> adds the ability to get the result of the background operation (Task<T> represents the value produced by the background operation). A Task is not a thread or a background operation, but it is sometimes used to convey the results of a background operation.

In .NET/C# terminology, we say that the task is completed when the event represented by a Task happens or the value represented by a Task<T> is available. The Task is also considered completed if it is marked as canceled or faulted.

For example, when we call Task.Delay(1000), we get an object that represents an event that will happen in 1 second but has no corresponding thread or activity. In the same way, if we call File.ReadAllBytesAsync, and, for example, there is no thread reading in the background, the system asks the disk controller (a different hardware device than the CPU) to load data and calls us when it’s done, so we get back a Task<byte[]> object that represents the data that will be received from the disk in the future.

The Read method we used in our example fills the buffer we gave it and returns the number of bytes that were successfully read. For compatibility and performance reasons, the ReadAsync method works in the same way, except it returns a Task<int> instead of an int. The returned Task<int> represents the number of bytes successfully read that will be available after the operation completes. Note that we should not touch the buffer we passed ReadAsync until the operation is complete.

So a Task or Task<T> object represents an event or a value that may be available in the future. When we want to know whether this event happened or the value is available yet, there are two asynchronous approaches supported by Task and Task<T>—to use a travel metaphor, there are the “Are we there yet” model and the “Wake me up when we arrive” model. Furthermore, there is also the synchronous approach if you can’t or don’t want to use asynchronous programming.

3.2.1 Are we there yet?

In the “Are we there yet” model, you are responsible for asking the Task whether it has completed yet, usually in a loop that does other things between those checks (this is called polling), which is done by reading the IsCompleted property. Note that IsCompleted is true even if the task has errored out or was canceled.

Task also has a Status property we can use. The task has completed if Status is RanToCompletion, Canceled, or Faulted. Using the IsCompleted property is better than using the Status property because checking one condition as opposed to three is more concise and less error-prone (we will talk about canceled and faulted tasks later in this book).

You should not check IsCompleted or Status in a loop unless you are doing other work between the checks. If most of what you do is just waiting for the task to complete, you are not only using up a thread for waiting, completely negating the advantages of asynchronous techniques, but you are also wasting CPU cycles, thus wasting resources that other code on the computer (including the work you are waiting for) could utilize for useful stuff.

This is just like asking “Are we there yet?” in a car. If you do it too often, you are interfering with what everyone else in the car is doing and might even arrive later if you annoy the driver.

Here’s an example of using IsCompleted in a loop to check whether the task has completed:

var readCompleted = File.ReadAllBytesAsync("example.bin");

while(!readCompleted.IsCompleted)

{

UpdateCounter();

}

var bytes = readCompleted.Result;

// do something with bytes

In this example, the program needs to continuously update a counter while waiting for the data to arrive from the disk. So it updates the counter and checks whether the read has completed in a loop. When the data is available, it exits the loop to process the data it just received.

Most of the time, we don’t have anything useful to do while waiting for IsCompleted to become true, so this model is rarely used. In most cases (and most of this book), we will let the .NET runtime schedule and run our tasks and will not use the “Are we there yet” model. This is only beneficial when we have something to do while waiting and don’t want to return and release the thread for some reason (we will see an example with UI threads later in this book).

3.2.2 Wake me up when we get there

In the “Wake me up when we get there” model, you pass a callback method to the task, and it will call you when it’s complete (or errored out or canceled). This is done by passing the callback to the ContinueWith method.

The task is passed as a parameter to the callback, so you can use it to check whether the operation completed successfully and, in the case of Task<T>, read the result value:

var readCompleted = File.ReadAllBytesAsync("example.bin");

readCompleted.ContinueWith( t =>

{

if(t.IsCompletedSuccessfully)

{

byte[] bytes = t.Result;

// do something with bytes

}

});

Unlike the previous model, this fits the needs of our example code very well. If we take a look at just the code immediately around the first Read call, it changes from

var fileId = new byte[2]; var read = file.Read(fileId, 0, 2); if (read != 2 || fileId[0] != 'B' || fileId[1] != 'M') …

to

var fileId = new byte[2];

var read = file.ReadAsync(fileId, 0, 2, CancellationToken.None).

ContinueWith(t=>

{

if (t.Result != 2 || fileId[0] != 'B' || fileId[1] != 'M')

…

In this case, we only replaced Read with ReadAsync and passed all the code that was after the Read call into ContinueWith as a lambda function (doing some more required changes if we use using or throw, but fortunately, it doesn’t affect the three lines of code in this snippet—we’ll talk about it later in this chapter).

Technically speaking, you can make multiple asynchronous calls by chaining ContinueWith calls with lambdas, as shown in the example, although this tends to be unreadable and creates extremely long lines of code. For example, reading 3 bytes from a file 1 byte at a time will look like this:

f.ReadAsync(buffer, 0, 1, CancellationToken.None).

ContinueWith( t1 =>

{

f.ReadAsync(buffer, 1, 1, CancellationToken.None).

ContinueWith( t1 =>

{

f.ReadAsync(buffer, 2, 1, CancellationToken.None).

ContinueWith( t1 =>

{

// finished readin 3 bytes!

});

});

});

The code isn’t very readable, and each ContinueWith pushes our code farther to the right. If I wanted to change this example to read 4 or more bytes in the same way, it wouldn’t fit within the width of the book’s page. (Spoiler: Later in this chapter, we’ll see how async/await solves this problem.)

3.2.3 The synchronous option

There is also the possibility that you will want to wait for a task in a non-asynchronous way. For example, if you write old fashion synchronous code that uses an API that only has a Task-based asynchronous method, the best way is to call the Task.Wait method or read the Task<T>.Result property. The Wait method and Result property will block the current thread until the task is complete and will throw an exception if the task is canceled or errored out, making it behave like synchronous code. Note that using the Wait method or the Result property to wait for a task to complete is inefficient and negates the advantages of using asynchronous programming in the first place. It also might cause deadlocks in some scenarios (deadlocks make your program become stuck, and we will talk about them extensively later in the book):

var readCompleted = File.ReadAllBytesAsync("example.bin");

var bytes = readCompleted.Result; ❶

// do something with bytes

❶ This will wait until the read has completed.

Generally, you would only use this approach when you had no other choice (mostly when integrating asynchronous and non-asynchronous code).

3.2.4 After the task has completed

After the task is completed, you need to check whether it completed successfully or not; both Task and Task<T> have the IsFaulted, IsCanceled, and IsCompletedSuccessfully properties that do exactly what their name suggests. They can be used after the task is complete to check the status of the task. (It’s okay to call them before the task completes; in that case, they just return false.) If IsFaulted is true, you can read the Exception property to see what went wrong.

In case the task is faulted, the easiest way to throw the error stored in a task so you can handle it with a normal try-catch block is to call Wait. Calling Wait after the task has completed is safe and will not block the thread (because the event it is waiting for has already happened). It will just return immediately if the task completed successfully or throw an exception if the task was canceled or has errored out. Because of this behavior, you don’t even have to check that the task is in a faulted or canceled state (it will throw an exception if the task was completed unsuccessfully).

So if you want to check whether the task has errored out and check the exception object without throwing, you would use

if(task.IsFaulted) HandleError(task.Exception);

However, if you want to check whether the task has errored out and throw the exception only after the task has completed, you could just use

task.Wait();

This works because, like we said, calling Task.Wait when the task has already completed will either do nothing and return immediately or throw an exception. Note that the last two code snippets behave differently if the task was canceled (there is an entire chapter about cancellation later in the book).

The exception in the Task.Exception property (or the exception thrown by the Wait method or Result property if the task is in a faulted state) will be an AggregateException. The AggregateException will contain the original exception in its InnerExceptions (plural) property, which should not be confused with the InnerException (singular) property that is inherited from Exception and is not used in this case. AggregateException is used here to support situations where the task represents the combination of several operations.

If you know there is just one exception, and you want to access it and not the AggregateException, you use something like

If(task.IsFaulted) HandleError(task.Exception.InnerExceptions[0]);

Task<T> (but not Task) also has a Result property that is used to get the value stored in the task. Typically, we will only read the Result property after the task has completed (IsCompleted is true or ContinueWith is called). If we try to read the Result property before the task is completed, the Result property will block and wait until the task is completed. This is equivalent to calling Wait and has all the same inefficiencies and dangers we talked about. If the task is in an error or canceled state, then reading Result will throw an exception.

To summarize, when using tasks without async/await, you can use the IsCompleted or Status properties to ask “Are we there yet?” And just like in a car, you don’t want to ask too often. You can use ContinueWith to make the task call you when it completes (“Wake me up when we arrive”). Finally, you can call Wait or Result to make the task synchronous, but that’s inefficient and dangerous because it will block the thread until the task is complete (calling Wait or Result after the task has completed is perfectly efficient and safe because the result is already available, and there’s no need for blocking).

Now that we understand how Task and Task<T> work, let’s see how async/await makes it easier to use.

3.3 How does async/await work?

We’ve already seen that Task and Task<T> are all we need to write asynchronous code, but writing any nontrivial code using ContinueWith and lambdas (like in the “Wake me up when we get there” example) gets tedious and unreadable pretty quickly. Let’s copy just the part that reads the file from our “get BMP width” example and convert it to use ReadAsync and ContinueWith.

We will do the simplest mechanical conversion possible. Every time there is a call to Read, we will replace it with a call to ReadAsync and just pass the rest of the code as a lambda function to ContinueWith:

file.ReadAsync(fileId, 0, 2,CancellationToken.None).

ContinueWith(firstReadTask =>

{

int read = firstReadTask.Result;

if (read != 2 || fileId[0] != 'B' || fileId[1] != 'M')

{

// get error to caller somehow

}

file.Seek(18, SeekOrigin.Begin);

var widthBuffer = new byte[4];

file.ReadAsync(widthBuffer, 0, 4, CancellationToken.None).

ContinueWith(secondReadTask =>

{

read = secondReadTask.Result;

if(read != 4) throw new Exception("Not a BMP file");

var result = BitConverter.ToInt32(widthBuffer, 0);

// get result back to our caller somehow

});

});

What a mess! What was a simple and readable method looks awful now. It is less readable because the code is divided by the async calls and no longer follows the logic of our algorithm. And worst of all, our conversion isn’t even correct! The original code had a using statement that disposed of the file on completion and on exception, so to get the same behavior, we have to wrap everything in try-catch blocks and do it ourselves (I didn’t add those to the code because it’s difficult to read even without it). We also need to get the exception and results to the caller, and because the lambdas are running asynchronously, we can no longer use return and throw to communicate with the caller of the method. Fortunately, we have async/await that takes care of this for us.

To rewrite our example with async/await and ReadAsync, we need to make the following changes:

-

First, we start by marking our method with the

asynckeyword, and as we’ll see a bit later, this by itself does nothing. -

We can no longer return an

intbecause as an asynchronous method, our method will return immediately and complete its work later. It’s not possible to return anintbecause we don’t know the correct value at the time the method returns! Fortunately, we do have a way to return “anintthat will be available in the future”—Task<int>. -

And finally, insert the

awaitkeyword before everyReadAsynccall. Theawaitkeyword tells the compiler that the code needs to be suspended at this point and resumed when whateverasyncoperation you are waiting for completes.

The following listing shows our method with async/await. Changes from the original non-async version are in bold.

Listing 3.2 Reading the BMP width (async version)

public async Task<int> GetBitmapWidth(string path)

{

using (var file = new FileStream(path, FileMode.Open, FileAccess.Read))

{

var fileId = new byte[2];

var read = await file.ReadAsync(fileId, 0, 2);

if (read != 2 || fileId[0] != 'B' || fileId[1] != 'M')

throw new Exception("Not a BMP file");

file.Seek(18, SeekOrigin.Begin);

var widthBuffer = new byte[4];

read = await file.ReadAsync(widthBuffer, 0, 4);

if(read != 4) throw new Exception("Not a BMP file");

return BitConverter.ToInt32(widthBuffer, 0);

}

}

It looks basically the same as the original non-async version, only with the async and await keywords added, but it’s actually very different. Let’s see what the code really does.

Note that the code in listing 3.3 describes what the compiler does conceptually. The actual code generated by the compiler is very different and much more complex. I’m using this simplified version because it is easier to understand while giving a good mental model of what the compiler does. At the end of this section, I’ll talk about the major differences between my version and the actual compiler code.

asynch/await uses the “Wake me up when we arrive” model. It breaks the code into chunks (like the yield return feature from the previous chapter) and uses the task’s ContinueWith method to run the chunks at the correct time.

Let’s see how the compiler rewrites our code. But before exploring what the compiler does, we’ll make just one tiny change: in the async/await example, we returned Task<int>, but we didn’t talk about how you can create a Task yet (don’t worry, there is a whole chapter about it later). Instead, we’re going to pass two callbacks to our method: setResult, which will be called when our code completes successfully, and setException, which will be called in case we get an exception.

What the compiler does is separate the code after an await into a different method (like we did with yield return in the previous chapter) and pass it to the Task’s ContinueWith method. To be able to share variables between the methods, we will move the local variables into a class like we did with lambda functions.

Listing 3.3 Reading the BMP width (async with ContinueWith only)

public void GetBitmapWidth(string path,

Action<int> setResult, Action<Exception> setException)

{

var data = new ClassForGetBitmapWidth();

data.setResult = setResult;

data.setException = setException;

data.file = new FileStream(path, FileMode.Open, FileAccess.Read); ❶

try

{

data.fileId = new byte[2]; ❶

var read = data.file.ReadAsync(data.fileId, 0, 2). ❶

ContinueWith(data.GetBitmapWidthStep2); ❶

}

catch(Exception ex) ❷

{ ❷

data.file.Dispose(); ❷

setException(ex); ❷

} ❷

}

❶ Code from listing 3.2

❷ Code added to simulate the using statement

This took care of the code before the first await. Note that our changes didn’t make this part run asynchronously at all. Everything before the first await runs like normal non-async code. And if you have a method marked with the async keyword without an await, then the entire method will run as if it weren’t an async method (except that the return value will be wrapped in a Task).

We had to replace the using statement with try-catch to make sure the file is disposed properly on exception (not try-finally because, if this part of the code succeeds, we need to keep the file open until the next part finishes).

Now for the class that we need to store the “local” variables, we use

private class ClassForGetBitmapWidth

{

public Stream file;

public byte[] fileId;

public byte[] widthBuffer;

public Action<int> setResult;

public Action<Exception> setException;

In this class, the code between the first and second await is

public void GetBitmapWidthStep2(Task<int> task)

{

try

{

var read = task.Result; ❶

if (read != 2 || fileId[0] != 'B' || fileId[1] != 'M') ❶

throw new Exception("Not a BMP file"); ❶

file.Seek(18, SeekOrigin.Begin); ❶

widthBuffer = new byte[4]; ❶

file.ReadAsync(widthBuffer, 0, 4). ❶

ContinueWith(GetBitmapWidthStep3);

}

catch(Exception ex) ❷

{ ❷

file.Dispose(); ❷

setException(ex); ❷

} ❷

}

❶ Code from listing 3.2

❷ Code added to simulate the using statement

It looks like we didn’t check the result of the previous operation. We didn’t read the Task IsCompletedSuccessfully property or the Task.Status property. Thus, we don’t know if there was an error. However, reading Task.Result will throw an exception if the task was completed unsuccessfully, so writing code to explicitly check for errors is not required. Also note that because this was called from ContinueWith, we know the task has already completed, and we are guaranteed the task is completed and reading Result is a nice, safe, and fast nonblocking operation.

Now for the part after the last await, we have

public void GetBitmapWidthStep3(Task<int> task)

{

try

{

var read = task.Result; ❶

if(read != 4) throw new Exception("Not a BMP file"); ❶

file.Dispose(); ❶

var result = BitConverter.ToInt32(widthBuffer, 0); ❶

setResult(result); ❷

}

catch(Exception ex) ❸

{ ❸

file.Dispose(); ❸

setException(ex); ❸

} ❸

}

}

❶ Code from listing 3.2

❷ Instead of a return statement

❸ Code added to simulate the using statement

Just like we’ve seen with yield return in chapter 2, the compiler divided our function into chunks and added code to call them at the correct time. We’ve also seen that the correct time for the first chunk, before the first await, is when the method was called. Marking the method as async does not make it asynchronous. It’s just a compiler flag to tell the compiler to look for await keywords and divide the method into chunks. In the same way, await does not wait—it actually ends the current chunk and returns control to the caller.

As promised, here are the major differences between the code we just talked about and the code the compiler really generates:

-

The compiler does not divide your code into different methods. It builds a single state machine method that keeps track of the current position using a variable and uses a big switch statement to run the correct piece of code.

-

The compiler does not use

ContinueWith; instead, it uses an internal object called an awaiter. I’ve chosen to useContinueWithbecause it’s conceptually similar, and unless you are writing a compiler or a replacement of the .NET asynchronous framework, you don’t need to know about it. -

awaitactually does much more thanContinueWith.ContinueWithjust makes the callback run when theTaskis complete, while the former has other useful features that we will talk about later in this book.

3.4 async void methods