Chapter 1 Introduction to Design Patterns

Introduction

One of the problems in understanding and using design patterns is the lack of proper insight into software architecture and into the reasons for using design patterns. Without this insight, design patterns only increase complexity: because they are not applied in the right place, using them is seen as wasted work. Design patterns can improve quality only when they are applied where they fit.

This chapter briefly examines software architecture and design patterns. It introduces enterprise application architecture and clarifies the relationship between software design problems and design patterns. The rest of the chapter takes a brief look at .NET, some object-oriented principles, and UML, because UML is used for modeling throughout the book, and the .NET framework and the C# language are used for the sample code.

Structure

In this chapter, we will cover the following topics:

- What is software architecture

- What are design patterns

- GoF design patterns

- Enterprise application and its design patterns

- Different types of enterprise applications

- Design patterns and software design problems

- Effective factors in choosing a design pattern

- .NET

- Introduction to object orientation in .NET

- Object orientation SOLID principles

- UML class diagram

- Conclusion

Objectives

By the end of this chapter, you will understand the role and place of design patterns in software design, be familiar with software architecture, and be able to evaluate software design problems from different aspects. You should also have a good understanding of the SOLID design principles and be familiar with .NET and UML.

What is software architecture

There are various definitions of software architecture today. Many of them describe it as the system's fundamental structure, shaped by design decisions that must be made in the early stages of software production. What all of these definitions have in common is their focus on what is important in a system. Regardless of our view of software architecture, we must always keep in mind that a suitable architecture is one that can be developed and maintained. Also, when we look at software from an architectural point of view, we must know which elements are most important and always try to keep those elements in the best possible condition.

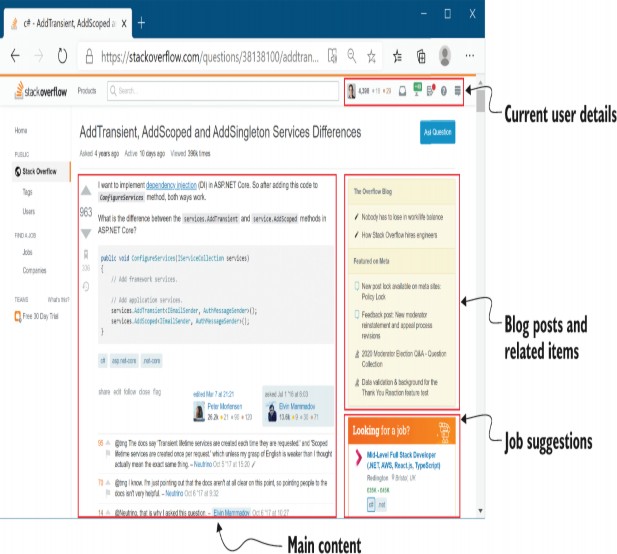

Consider software that needs to be better designed, and its essential elements must be identified. During the production and maintenance of this software, we will need help with various problems, including implementing changes, which will reduce the speed of providing new features and increase the volume of software errors and bugs. For example, pay attention to the following figure:

考虑需要更好设计的软件,并且必须确定其基本元素。在该软件的制作和维护过程中,我们将需要帮助解决各种问题,包括实施更改,这将降低提供新功能的速度并增加软件错误和错误的数量。例如,请注意下图:

Figure 1.1: An example of software without proper architecture

图 1.1: 没有适当架构的软件示例

In the preceding figure, filled cells represent delivered features, and empty cells represent design and architectural problems and defects.

If we take a single row of Figure 1.1, we get the following figure:

Figure 1.2: Sample feature delivery in software without proper architecture

This shows how much time it takes to deliver three different features. If the correct design and architecture had been adopted, new features would have been delivered more quickly, and the same row would look like the following figure:

Figure 1.3: Sample feature delivery in software with proper architecture

The difference in length between the two preceding figures (Figure 1.2 and Figure 1.3) is significant, which shows the importance of the right design and architecture in software. In the short term, building a high-quality infrastructure may slow production down. This is natural, and the benefits of a high-quality infrastructure appear in the long run.

The following figure shows the relationship between Time and Output:

Figure 1.4: Time-Output Relation in Software Delivery

In Figure 1.4, at the beginning of the work, output is reached faster with a low-quality infrastructure than with a high-quality one. However, as time passes and the software grows in capabilities and complexity, a higher-quality infrastructure makes it faster to maintain the software and apply changes. This reduces costs, increases user satisfaction, and improves maintainability.

In this regard, the late American computer scientist Gerald Weinberg once said:

“If builders built buildings the way programmers wrote programs, then the first woodpecker that came along would destroy civilization.”

With this quote, Weinberg emphasized the importance of infrastructure and software architecture: paying attention to maintainability in the design and implementation of software solutions is essential. Today, various principles can help in reaching a suitable infrastructure.

Some of these principles are as follows:

- Separation of concerns: Different parts of the software should be separated from each other according to their responsibilities.

- Encapsulation: A way to restrict direct access to some of an object's components, so that users cannot directly access the state of every variable of a particular object. Encapsulation can hide the data members, functions, or methods associated with an instantiated class or object. Users do not need to know how a class is implemented or how its data is stored; they only know which values are passed in and initialized (data hiding). Encapsulation also makes it easier to change the code and adapt it to new requirements.

- Dependency inversion: High-level modules should not depend on low-level modules; the dependency between them should exist only through abstractions. To clarify the issue, consider the following example:

Software has two different times: compile time and runtime. Suppose that in the compile-time dependency graph, classes A, B, and C have the following relationship:

Figure 1.5: Relationship between A, B, and C in compile-time

As you can see, at compile time A depends directly on B in order to call a method of B, and B depends on C in exactly the same way. At runtime, the connections are established in the same way, as follows:

Figure 1.6: Relationship between A, B, and C in runtime

The problem with this type of communication is that there is no loose coupling between A and B or between B and C: these parts are highly dependent on each other, which causes maintainability problems. To solve this problem, instead of a direct connection from A to B, the compile-time connection is based on abstractions, as shown in the following figure:

Figure 1.7: Relationship between A, B, and C based on abstractions

In this arrangement, A depends at compile time on an abstraction of B, and B implements that abstraction. At runtime, the flow of calls remains the same as before (A still calls B), but the coupling becomes loose: the implementation of B can be changed without changing A.

The runtime communication in this arrangement is shown in the following figure:

Figure 1.8: Relationship between A, B, and C based on abstractions in runtime

- Explicit dependencies: Classes and methods must be honest with their users about what they need in order to work. For example, if a class can only function properly when attribute X has a correct value, this requirement can be enforced through the class constructor, which prevents unusable objects from being created.

- Single responsibility: This principle is proposed in object-oriented design as one of the architectural principles. It is similar to separation of concerns and states that an object should have only one responsibility, and therefore only one reason to change.

- DRY (Don't Repeat Yourself): The behavior related to a specific concept should not be implemented in several places. If this principle is violated, any change to that behavior must be applied to every copy of the code, which increases the probability of errors and bugs.

- Persistence ignorance: Business models should be storable in a data source regardless of the type of that data source. In .NET, such models are often called Plain Old CLR Objects (POCOs). This matters because the storage technology can change over time (for example, from SQL Server to Azure Cosmos DB), and such a change should not affect the rest of the system. Some signs that this principle is being violated are the following:

  - Binding to a specific parent class
  - The requirement to implement a specific interface
  - Requiring the class to store itself (as in Active Record)
  - The presence of mandatory parameterized constructors
  - The presence of virtual members in the class
  - The presence of attributes specific to a storage technology

  The preceding cases violate the principle of persistence ignorance because they often create a dependency between the models and the storage technology, making it difficult to adopt a new storage technology in the future.

- Bounded contexts: A larger problem can be divided into smaller conceptual sub-problems, each representing a context that is independent of the other contexts. Communication between different contexts is established through programming interfaces. Any direct communication or data source shared between contexts should be avoided, because it causes tight coupling between them.

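A minimal C# sketch of the abstraction-based arrangement in Figures 1.7 and 1.8 follows, applying the same idea between B and C. The interface names IB and IC and the class FakeB are hypothetical (the figures do not name the abstractions). A depends only on IB at compile time, yet at runtime it still calls B. The constructors also illustrate the explicit dependencies principle: an A or a B cannot be created without the dependency it needs.

```csharp
using System;

// Abstraction that A depends on at compile time (hypothetical name).
public interface IB
{
    string DoWork();
}

// Abstraction for C, so that B does not depend on a concrete C either.
public interface IC
{
    string Compute();
}

public class C : IC
{
    public string Compute() => "C";
}

// B implements IB and declares its own dependency on IC explicitly.
public class B : IB
{
    private readonly IC _c;

    public B(IC c)
    {
        // Explicit dependency: a B without a usable IC cannot be created.
        _c = c ?? throw new ArgumentNullException(nameof(c));
    }

    public string DoWork() => "B->" + _c.Compute();
}

// A knows only the IB abstraction; the concrete B is supplied from outside.
public class A
{
    private readonly IB _b;

    public A(IB b)
    {
        _b = b ?? throw new ArgumentNullException(nameof(b));
    }

    public string Run() => "A->" + _b.DoWork();
}

// A different implementation of IB that A can use without any change.
public class FakeB : IB
{
    public string DoWork() => "FakeB";
}
```

For example, `new A(new B(new C())).Run()` follows the same runtime path as Figure 1.6 (A calls B, which calls C), while `new A(new FakeB())` swaps the implementation of B without touching A, which is the loose coupling described above.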
What are design patterns

As the term "design pattern" suggests, it is simply a pattern that can be used to solve a recurring problem. This means that a design pattern is not a finished design that can be converted directly into source code or machine code. During the design and production of software, we face various design and implementation problems that repeat, so their solutions often follow a fixed form. For example, software may need a feature that sends messages to the end user, and a suitable infrastructure must be designed and implemented for this requirement. On the other hand, there are different ways to send messages to end users, such as email, SMS, and so on. This problem has the same general shape in most software, and its solution often has a fixed design and format.

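The messaging example above can be sketched in C# to show what such a recurring "fixed format" might look like. All type names (IMessageSender, EmailSender, SmsSender, NotificationService) are hypothetical, and the senders only return strings instead of contacting a real mail server or SMS gateway. The structure, one abstraction with interchangeable implementations, is close to the Strategy pattern, but it is only one possible design.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical abstraction for "send a message to the end user".
public interface IMessageSender
{
    string Channel { get; }
    string Send(string recipient, string text);
}

public class EmailSender : IMessageSender
{
    public string Channel => "Email";

    // A real implementation would call an SMTP server or e-mail API here.
    public string Send(string recipient, string text) => $"Email to {recipient}: {text}";
}

public class SmsSender : IMessageSender
{
    public string Channel => "SMS";

    // A real implementation would call an SMS gateway here.
    public string Send(string recipient, string text) => $"SMS to {recipient}: {text}";
}

// The notification logic depends only on the abstraction,
// so a new channel can be added without changing this class.
public class NotificationService
{
    private readonly IReadOnlyList<IMessageSender> _senders;

    public NotificationService(IReadOnlyList<IMessageSender> senders)
    {
        _senders = senders ?? throw new ArgumentNullException(nameof(senders));
    }

    // Sends the message through every configured channel and returns what was sent.
    public List<string> Notify(string recipient, string text)
    {
        var results = new List<string>();
        foreach (var sender in _senders)
        {
            results.Add(sender.Send(recipient, text));
        }
        return results;
    }
}
```

Adding, say, a push-notification channel would mean writing one more IMessageSender implementation; NotificationService stays unchanged.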
A design pattern is a general, repeatable solution to a common problem in software design. Consequently, if we encounter a genuinely new problem during software production, there may be no existing pattern for it, and we must solve it without the help of established practices by designing a correct structure ourselves.

Using design patterns has several advantages:

- Increased scalability

- Increased extensibility

- Increased flexibility

- Faster development

- Fewer errors and problems

- Less code to write

An important point about design patterns is that they are not part of a software system's architecture; they only provide proven ways of writing object-oriented code. With them, you can choose and implement the right way to solve a problem.

GoF design patterns

The concept of a pattern was introduced by Christopher Alexander, an architect who used patterns to design buildings. Alexander's way of thinking led Erich Gamma to apply design patterns to software development in his doctoral dissertation. Shortly afterwards, Richard Helm started working with Erich Gamma, and later John Vlissides and Ralph Johnson also joined the group. The initial idea was to publish the design patterns as an article, but because of its length, the full text was published as a book. This four-person group, also known as the Gang of Four (GoF), published the book “Design Patterns: Elements of Reusable Object-Oriented Software”, in which they classified and presented 23 design patterns in three categories (creational, structural, and behavioral). They tried to categorize the patterns from the user's perspective. To present each pattern fully, the GoF developed a general structure for introducing design patterns, consisting of the following sections:

- Name and Classification: The name of the design pattern and the category it belongs to.

- Also Known As: Other names by which the design pattern is known, if any.

- Intent: A brief explanation of the design pattern.

- Motivation, Structure, Implementation, and Sample Code: A description of the problem, the main structure, the implementation steps, and the source code of the design pattern.

- Participants: The participants in the design pattern (the classes and objects involved) and their roles.

- Notes: Significant points about the design and implementation of the design pattern.

- Consequences: The advantages and disadvantages of the design pattern.

- Applicability: The situations in which the design pattern can be helpful.

- Related Patterns: The relationship of the design pattern to other design patterns.

The 23 patterns can be classified by scope (whether the pattern applies to classes or to their objects) and by purpose (what the pattern does), as shown in the following table:

| Scope | Behavioral | Structural | Creational |
|---|---|---|---|
| Class | Interpreter, Template Method | Class Adapter | Factory Method |
| Object | Chain of Responsibility, Command, Iterator, Mediator, Memento, Observer, State, Strategy, Visitor | Object Adapter, Bridge, Composite, Decorator, Façade, Flyweight, Proxy | Abstract Factory, Builder, Prototype, Singleton |

Table 1.1: Classification of GoF Design Patterns


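Table 1.1 lists Adapter under both scopes. The following C# sketch, using hypothetical types ITemperatureSensor and FahrenheitSensor, shows the difference: the class adapter reuses the adaptee through inheritance, fixed at compile time, while the object adapter wraps an adaptee instance supplied at runtime. It illustrates the scope column only and is not a full treatment of the Adapter pattern.

```csharp
using System;

// Target interface that client code expects (hypothetical).
public interface ITemperatureSensor
{
    double GetCelsius();
}

// Existing class with an incompatible interface (the adaptee).
public class FahrenheitSensor
{
    public double ReadFahrenheit() => 98.6;
}

// Class scope: the adapter inherits from the adaptee,
// so the adapter-adaptee relationship is fixed at compile time.
public class FahrenheitClassAdapter : FahrenheitSensor, ITemperatureSensor
{
    public double GetCelsius() => (ReadFahrenheit() - 32) * 5 / 9;
}

// Object scope: the adapter holds an adaptee object (composition),
// so any FahrenheitSensor instance can be supplied at runtime.
public class FahrenheitObjectAdapter : ITemperatureSensor
{
    private readonly FahrenheitSensor _sensor;

    public FahrenheitObjectAdapter(FahrenheitSensor sensor)
    {
        _sensor = sensor ?? throw new ArgumentNullException(nameof(sensor));
    }

    public double GetCelsius() => (_sensor.ReadFahrenheit() - 32) * 5 / 9;
}
```

Both adapters give client code the same ITemperatureSensor view (about 37 °C here); they differ only in whether the adaptation is bound to a class or to an object.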
Every design pattern has the following four essential elements:

- Name: Every pattern must have a name. The name should be chosen so that the pattern's application, problem, or solution can be inferred from it.

- Problem: Describes the problem and when the design pattern should be applied.

- Solution: Describes the solution, the elements involved, and their relationships.

- Consequences: Describes the results, advantages, disadvantages, and effects of applying the design pattern.

The relationships among these 23 patterns are shown in the following figure:

Figure 1.9: Relationships of GoF Design Patterns

The GoF design patterns are not the only design patterns available. Martin Fowler introduced another set of design patterns, called Patterns of Enterprise Application Architecture (PofEAA), which look at software production problems from a different angle. He tried to provide suitable solutions to everyday problems in building enterprise software. Although there are specific criteria for using design patterns, even a small software system may need PofEAA design patterns. Fowler divides these patterns into the following categories:

- Domain-logic patterns

- Data-source architectural patterns

- Object-relational behavioral patterns

- Object-relational structural patterns

- Object-relational metadata-mapping patterns

- Web presentation patterns

- Distribution patterns

- Offline concurrency patterns

- Session-state patterns

- Base patterns

This book attempts to explain the GoF and PofEAA design patterns with a simple approach, along with practical examples.

Enterprise application and its design patterns

People build many different types of applications, each with its own challenges and complexities. For example, in one piece of software, concurrency issues may be significant and critical, while in another, the complexity of data structures may be the main concern. The terms enterprise application and information system refer to systems in which we face complex data processing and storage. Implementing such software requires specific design patterns to manage business logic and data. It is important to understand that some design patterns are useful for many types of software, while others are more suitable for enterprise applications.

Well-known examples of enterprise applications include accounting, toll payment, insurance, and customer service software. On the other hand, software such as word processors, operating systems, compilers, and even computer games does not belong to the enterprise application category.

An important characteristic of enterprise applications is the persistence of data. Data may be stored in data sources for years, because it is needed at different times, in different parts of the program, and at different steps of a process. During the lifetime of the data, we may encounter both small and significant changes in operating systems, hardware, and compilers. The volume of data in an enterprise application is often large, and different databases are often needed to store it.

When there is a lot of data to present to users, graphical interfaces and many different pages are needed. The users of these pages differ from one another and have different levels of knowledge about software and computers, so different methods and procedures are needed to present data to them effectively.

Enterprise applications often need to communicate with other software, each of which may have its own technology stack. As a result, we face different interaction, communication, and integration methods. Even at the level of business analysis, each system may analyze a specific entity differently, leading to different data structures. From another point of view, business logic can be complex, and it is very important to organize it effectively and to be able to change it over time.

The term enterprise application often suggests that we are dealing with a large piece of software, but this is not necessarily true. A small piece of software can create more value for the end user than a large one. One way to deal with a big problem is to break it down into smaller problems; solving these smaller problems leads to the solution of the bigger one. The same principle applies to large software.

Different types of enterprise applications

It should always be kept in mind that every enterprise application has its own challenges and complexities. Therefore, a single solution cannot be generalized to all types of enterprise applications. Consider the following two examples:

Example 1: An online store has many concurrent users. In this case, the proposed solution should use resources effectively and scale well, so that adding hardware increases the number of incoming requests and concurrent users the system can support. End users must be able to work with this type of software easily, so it will be necessary to design a web application that runs on most browsers.

Example 2: In other software, the number of concurrent users is low, but the business complexity is high. These systems need more sophisticated graphical interfaces to manage more complex transactions.

As the two preceding examples show, a single fixed architectural design cannot fit every type of enterprise software. As mentioned before, the choice of architecture depends on a precise understanding of the problem.

An important point in dealing with enterprise applications and their architecture is performance, and teams approach it differently. One team may focus on performance from the beginning, while another may prefer to build the software first and then identify and fix performance issues by monitoring various metrics. A team might also combine these two approaches. Whichever approach is used, the following factors are usually important to address:

- Response time: The time it takes to process a request and return the appropriate response to the user.

- Responsiveness: How quickly the system acknowledges a request, as opposed to fully processing it. For example, suppose the user is uploading a file. Responsiveness is better if the user can keep working with the software during the upload. If instead the user has to wait until the upload finishes, the responsiveness is equal to the response time.

- Latency: The minimum time it takes to receive any response. For example, when we are connected to another system through Remote Desktop, the time it takes for a request and its response to travel across the network indicates the latency.

- Throughput: The amount of work that can be done in a given period of time. For example, when copying a file, throughput can be measured as the number of bytes copied per second. For enterprise applications, metrics such as transactions per second (TPS) are also used.

- Load: The amount of pressure on the system, for example, the number of online users. Load is usually the context in which the other factors are measured. For example, the response time may be 1 second for 10 users and 5 seconds for 20 users.

- Load sensitivity: How the response time changes as the load changes. For example, assume that system A has a response time of 1 second for anywhere between 10 and 20 users, while system B has a response time of 0.5 seconds for 10 users that rises to 2 seconds for 20 users. In this case, A has lower load sensitivity than B.

- Efficiency: Performance divided by resources. For example, a system that reaches 40 TPS on 2 CPU cores is more efficient than a system that reaches 50 TPS on 6 CPU cores.

- Capacity of system: A measure of the maximum effective throughput or load that the system can tolerate.

- Scalability: A measure of how adding resources affects performance. Two methods are commonly used: vertical scaling (scale up), which adds resources such as CPU or memory to a single machine, and horizontal scaling (scale out), which adds more machines.

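The efficiency and load sensitivity examples in the list above can be checked with simple arithmetic. Here efficiency is taken as TPS per CPU core, and load sensitivity as the change in response time per additional user; both are simplified definitions assumed for illustration, not formulas given in the text.

$$
E = \frac{\text{throughput (TPS)}}{\text{CPU cores}}, \qquad
E_1 = \frac{40}{2} = 20\ \text{TPS per core}, \qquad
E_2 = \frac{50}{6} \approx 8.33\ \text{TPS per core}
$$

$$
S = \frac{\Delta t_{\text{response}}}{\Delta N_{\text{users}}}, \qquad
S_A = \frac{1 - 1}{20 - 10} = 0\ \text{s/user}, \qquad
S_B = \frac{2 - 0.5}{20 - 10} = 0.15\ \text{s/user}
$$

The first system does more than twice as much work per core, and system A's response time does not grow at all between 10 and 20 users, while system B's grows by 0.15 seconds for each additional user.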
The critical point is that a design decision does not necessarily affect all of these performance factors in the same way. When building enterprise applications, scalability is usually given higher priority, because it can have a more significant effect on performance and is easier to achieve. In some situations, however, a team may prefer to increase throughput through a series of complex optimizations so that it does not have to bear the high cost of purchasing hardware.

The PofEAA patterns presented in this book are inspired by the patterns in Martin Fowler's book Patterns of Enterprise Application Architecture. They are presented using the following structure:

- Name and Classification: The name of the design pattern and the category it belongs to.

- Also Known As: Other names by which the design pattern is known, if any.

- Intent: A brief explanation of the design pattern.

- Motivation, Structure, Implementation, and Sample Code: A description of the problem, the main structure, the implementation steps, and the source code of the design pattern.

- Notes: Significant points about the design and implementation of the design pattern.

- Consequences: The advantages and disadvantages of the design pattern.

- Applicability: The situations in which the design pattern can be helpful.

- Related Patterns: The relationship of the design pattern to other design patterns.

Design patterns and software design problems

When we talk about software design, we mean the plan, map, or structural layout on which the software is built. During software production, various design problems need to be identified and resolved. The same is true in everyday life: whenever we propose a solution, it addresses a specific problem, and software production works the same way. As mentioned earlier, design patterns solve many different problems in software production. To identify and apply a suitable design pattern for a problem, the first step is to determine the relationship between design patterns and the problem at hand. To understand this relationship better, consider the following points:

1. Finding the right objects: An object-oriented program is made up of many different objects. Each object contains a set of data and performs certain tasks. What an object can do is called its behavior, and it is implemented by the object's methods. To change the data an object carries, you must go through its methods. One of the most important and most difficult parts of designing and implementing an object-oriented program is decomposing a system into a set of objects. This is difficult because the decomposition must balance many factors, such as encapsulation, granularity, dependency, flexibility, performance, and so on.

When a problem arises, there are different ways to turn it into an object-oriented design. One way is to look at the structure of the sentences that describe the problem, turn the nouns into classes, and turn the verbs into methods. For example, consider the following sentence:

"A user can log in to the system by entering the username and password."

"User" is the noun of the sentence, and "log in" is its verb. Therefore, you can create a class called User that has a method called Login, as follows:

public class User
{
    // Inputs such as the username and password would be passed here.
    public void Login(/*Inputs*/) { }
}

Another way is to focus on the connections, tasks, and interactions in the system and identify the classes, methods, and so on from them. Whichever method is used, by the end of the design we may end up with classes that have no counterpart in the real world or the business environment. Design patterns help identify such less obvious abstractions, so that these classes can be placed where they belong and used there. For example, a class that implements a sorting algorithm may not be identified in the early stages of analysis and design, but design patterns help design such a class correctly and connect it with the rest of the system.
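The sorting case can be sketched with the Strategy pattern, one GoF pattern that fits this situation. This is a minimal sketch under stated assumptions, not the book's implementation. ISortStrategy, AscendingSort, DescendingSort, and NumberList are hypothetical names. The sorting algorithm becomes a class of its own even though no such object exists in the business domain:

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical abstraction for a family of interchangeable sorting algorithms.
public interface ISortStrategy
{
    List<int> Sort(IEnumerable<int> items);
}

// Sorts in ascending order using LINQ's OrderBy.
public class AscendingSort : ISortStrategy
{
    public List<int> Sort(IEnumerable<int> items) => items.OrderBy(x => x).ToList();
}

// Sorts in descending order using LINQ's OrderByDescending.
public class DescendingSort : ISortStrategy
{
    public List<int> Sort(IEnumerable<int> items) => items.OrderByDescending(x => x).ToList();
}

// Hypothetical client: it depends only on the abstraction,
// so the algorithm can be swapped at run time.
public class NumberList
{
    private readonly List<int> _items;
    private ISortStrategy _strategy;

    public NumberList(IEnumerable<int> items, ISortStrategy strategy)
    {
        _items = new List<int>(items);
        _strategy = strategy;
    }

    public void SetStrategy(ISortStrategy strategy) => _strategy = strategy;

    public List<int> Sorted() => _strategy.Sort(_items);
}
```

Because NumberList knows only ISortStrategy, you can add a new algorithm without modifying NumberList.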

2. Recognizing the granularity of objects: An object has a structure and can carry various details, and the level of detail can be very high or very low. This affects both the size and the number of objects, so deciding what boundaries and limits an object's structure should have is an important decision. Design patterns can help draw these boundaries accurately.

3. Knowing the interface of objects: Each behavior (operation) of an object is described by its name, its input parameters, and its output type. Together, these three components form the signature of that behavior. The set of all signatures an object provides is called the object's interface. The interface specifies which requests can be sent to the object and in what form. These interfaces are required to communicate with an object, although knowing an interface says nothing about how it is implemented. Associating a request with the appropriate object and behavior at run time is called dynamic binding.

The following class declares two GetAge behaviors with different signatures (same name, different parameters):

public class Sample
{
    // Signature: GetAge(string) returns int
    public int GetAge(string name) => 0; // placeholder body

    // Signature: GetAge(string, string) returns int
    public int GetAge(string nationalNo, string name) => 0; // placeholder body
}

Issuing a request in code does not bind it to a particular implementation. The binding happens at run time, which is what makes it dynamic. As a result, objects that share the same interface can be substituted for one another at run time, which in object orientation is called polymorphism. Design patterns also help shape such communications and interactions, for example, by placing constraints on the structure of classes.
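To illustrate dynamic binding, here is a minimal sketch that assumes a hypothetical IShape interface with two implementations. The call shape.Area() is written once in TotalArea, and the actual object at run time decides which implementation runs:

```csharp
using System.Collections.Generic;

// Hypothetical interface: the signatures that clients may call.
public interface IShape
{
    double Area();
}

public class Rectangle : IShape
{
    private readonly double _width, _height;
    public Rectangle(double width, double height) { _width = width; _height = height; }
    public double Area() => _width * _height;
}

public class Square : IShape
{
    private readonly double _side;
    public Square(double side) => _side = side;
    public double Area() => _side * _side;
}

public static class ShapeReport
{
    // Written against the interface only; each call is bound
    // to Rectangle.Area or Square.Area at run time (dynamic binding).
    public static double TotalArea(IEnumerable<IShape> shapes)
    {
        double total = 0;
        foreach (IShape shape in shapes)
            total += shape.Area();
        return total;
    }
}
```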

4. Knowing how to implement objects: Objects are created by instantiating a class, which allocates memory for the object's internal data. With inheritance, new classes can also be defined as subclasses (children) of an existing class. In this case, the child class contains all the accessible data and behaviors of its parent class. If a class needs to leave the implementation of some behavior to its children (abstract behavior), it can be defined as an abstract class. Since such a class is only an abstraction, it cannot be instantiated. A class that is not abstract is called a concrete class.

public abstract class AbstractSample { }  // Abstract class

public class ConcreteSample { }  // Concrete class

public abstract class SampleWithAbstractMethod
{
    public abstract void Get();  // Abstract method: no body; children must implement it
}

How objects are instantiated, and how classes are formed and implemented, are very important points that deserve attention. Several design patterns are useful in these situations. For example, some design patterns help with how classes are implemented, and others help define their static structure.
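Here is a short sketch of an abstract class that leaves one behavior to its children. Shape and Square are hypothetical names. The abstract method has no body, the concrete child overrides it, and only the concrete class can be instantiated:

```csharp
// Abstract class: declares a behavior but leaves its implementation to children.
public abstract class Shape
{
    // Abstract method: no body here; every concrete child must override it.
    public abstract double Area();

    // Concrete method shared by all children; it calls the abstract one.
    public string Describe() => $"{GetType().Name} with area {Area()}";
}

// Concrete class: implements every abstract member, so it can be instantiated.
public class Square : Shape
{
    private readonly double _side;
    public Square(double side) => _side = side;
    public override double Area() => _side * _side;
}

// Shape s = new Shape();  // would not compile: an abstract class cannot be instantiated
```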

5. Development based on interfaces: With inheritance, a class can access and reuse the accessible behavior and data of its parent class. However, reusing an implementation and having a family of objects with a common interface are two different things, and the latter is what makes polymorphism possible. This is usually achieved with abstract classes or interfaces.

When code relies on abstract classes and interfaces, clients remain unaware of the exact types of the objects they use, as long as those objects adhere to the expected abstraction or interface. Clients are also unaware of the classes that implement these objects; they know only the abstraction. This makes it possible to write code against interfaces and abstractions rather than implementations.

The main purpose of creational design patterns is to provide different ways of associating an interface with its implementation. Patterns in this category try to make this association transparently at the time of instantiation.
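To illustrate programming against an interface, the following sketch hides the concrete classes behind a simple static factory method. This is not a specific GoF pattern; it only shows the idea that creational patterns build on. INotifier, EmailNotifier, SmsNotifier, and NotifierFactory are hypothetical:

```csharp
// Hypothetical abstraction that the client programs against.
public interface INotifier
{
    string Send(string message);
}

// Concrete implementations; the client never names them directly.
internal class EmailNotifier : INotifier
{
    public string Send(string message) => $"Email: {message}";
}

internal class SmsNotifier : INotifier
{
    public string Send(string message) => $"SMS: {message}";
}

// The only place that knows which concrete class to instantiate.
public static class NotifierFactory
{
    public static INotifier Create(string channel) => channel switch
    {
        "email" => new EmailNotifier(),
        "sms" => new SmsNotifier(),
        _ => throw new System.ArgumentException($"Unknown channel: {channel}", nameof(channel))
    };
}
```

Client code such as `INotifier n = NotifierFactory.Create("sms");` depends only on INotifier, so the concrete classes can change without touching the client.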

6. Attention to reuse: Another important problem in software design and implementation is to benefit from reusability while giving the code appropriate flexibility. For example, you should understand the differences between inheritance and composition and use each in the right place; these are the two most widely used techniques for code reuse. With inheritance, one class is implemented in terms of another, and reuse takes the form of a child class definition. This type of reuse is called white-box reuse, because the internals of the parent class are often visible to the child class:

public class Parent
{
    public void Show_Parent() { }
}

public class Child : Parent  // Inheritance
{
    public void Show_Child() { }
}

Composition, on the other hand, provides reuse by holding an object inside a class and adding new functionality in that class. This type of reuse is called black-box reuse, because the internal details of the contained object are not visible:

public class Engine
{
    public void Get() { }
}

public class Car
{
    private Engine _engine;

    public Car(Engine engine) => _engine = engine;  // Composition
}

Both inheritance and composition have advantages and disadvantages that should be considered when using them. In practice, however, many programmers overuse inheritance to achieve reuse, and this causes problems as the code evolves. In many scenarios, composition is the better choice. Delegation makes composition as powerful for reuse as inheritance. There are also other ways to achieve suitably reusable code. For example, C# has a feature called generics, also known as parameterized types, which is very useful for this purpose. Building on these techniques, a number of design patterns help provide reusability and flexibility in code.
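The following sketch extends the Engine and Car example with delegation and adds a generic type. It assumes hypothetical Start and IsRunning members (the original Engine only has Get) and a hypothetical Garage<T> class. Car forwards its work to the Engine it holds, and Garage<T> is a single implementation reused for any element type:

```csharp
using System.Collections.Generic;

public class Engine
{
    public bool IsRunning { get; private set; }
    public void Start() => IsRunning = true;
}

// Composition + delegation: Car holds an Engine and forwards requests to it
// instead of inheriting from Engine.
public class Car
{
    private readonly Engine _engine;
    public Car(Engine engine) => _engine = engine;

    public void Start() => _engine.Start();        // delegated to the engine
    public bool IsRunning => _engine.IsRunning;    // delegated to the engine
}

// Generic (parameterized) type: one implementation reused for any type T.
public class Garage<T>
{
    private readonly List<T> _items = new List<T>();
    public void Park(T item) => _items.Add(item);
    public int Count => _items.Count;
}
```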

7. Design for change: A good design anticipates future changes and is not fragile in the face of them. A design that fails to anticipate change is likely to need extensive redesign later. One of the functions and advantages of design patterns is that they make a design flexible to future changes.

Effective factors in choosing a design pattern


When first faced with the list of 23 GoF design patterns, it can be difficult to know which pattern to choose for a particular problem. The difficulty increases when the PofEAA design patterns are added to this list, which can make the selection process confusing. To make a suitable choice, consider the following points:

- Understanding the problem space and how the design pattern can solve the problem: The first step in choosing a design pattern is to identify the problem correctly. Once the problem is clear, think about how a design pattern can help solve it.

- Examining the generalities of design patterns by purpose and scope: This review shows how well the problem at hand matches each design pattern.

- Examining the interconnections of design patterns: For example, if Abstract Factory is combined with Singleton, only one instance of the concrete factory is created. To make the factory more dynamic, for example, to configure its products at run time, Prototype can be used.

- Examining the similarities and differences of the design patterns: For example, if the problem at hand is a behavioral problem, you can choose the appropriate pattern from among the behavioral patterns.

- Knowing the causes of redesign: In this step, you should know the factors that can force a redesign.

- Knowing the design variables: In this step, you should understand what can vary in the design.
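The Abstract Factory and Singleton combination mentioned in the list above can be sketched as follows. The widget names are hypothetical. Lazy<T> is one common way to write a thread-safe Singleton in C#, not the only one:

```csharp
// Abstract products (hypothetical UI widgets).
public interface IButton { string Render(); }
public interface ICheckbox { string Render(); }

// Abstract Factory: creates a family of related products.
public interface IWidgetFactory
{
    IButton CreateButton();
    ICheckbox CreateCheckbox();
}

public class DarkButton : IButton { public string Render() => "Dark button"; }
public class DarkCheckbox : ICheckbox { public string Render() => "Dark checkbox"; }

// Concrete factory implemented as a Singleton: only one instance can exist.
public sealed class DarkWidgetFactory : IWidgetFactory
{
    // Lazy<T> creates the single instance on first use, in a thread-safe way.
    private static readonly System.Lazy<DarkWidgetFactory> _instance =
        new System.Lazy<DarkWidgetFactory>(() => new DarkWidgetFactory());

    public static DarkWidgetFactory Instance => _instance.Value;

    // Private constructor prevents "new DarkWidgetFactory()" from outside.
    private DarkWidgetFactory() { }

    public IButton CreateButton() => new DarkButton();
    public ICheckbox CreateCheckbox() => new DarkCheckbox();
}
```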

Once the appropriate design pattern is chosen, it should be implemented. To use and implement a design pattern, you must first study the pattern thoroughly, paying careful attention to its applicability and consequences. After understanding the pattern in general, examine its details, so that you know the participating elements and understand how they interact.

Next, review the existing sample code to see how the pattern can be implemented. Then choose appropriate names for each participating element, taking into account the problem and the business at hand; each name should reflect the purpose of the element in that business. After choosing the names, implement the classes, interfaces, and relationships. During implementation, you may need to change code in different parts of the system. Choosing appropriate names for the methods and implementing them are the next steps to consider when implementing a design pattern.

Figure 1.10: Choosing Design Pattern Process


.NET

In 2002, Microsoft released .NET Framework, a development platform for creating Windows apps. Today, .NET Framework is at version 4.8 and remains fully supported by Microsoft. In 2014, Microsoft introduced .NET Core as a cross-platform, open-source successor to .NET Framework. This new implementation of .NET kept the name .NET Core through version 3.1; the next version was named .NET 5. New versions continue to be released annually, each with a higher version number. They include significant new features and often enable new scenarios.

There are multiple variants of .NET, each supporting a different type of app. The reasons for the multiple variants are partly historical and partly technical.

.NET implementations (historical order):

- .NET Framework: The original .NET. It provides access to the broad capabilities of Windows and Windows Server and is also extensively used for Windows-based cloud computing.

- Mono: A cross-platform implementation of .NET Framework. It is the original community-driven, open-source .NET, used for Android, iOS, and Wasm apps.

- .NET (Core): A cross-platform, open-source implementation of .NET, rethought for the cloud age while remaining significantly compatible with .NET Framework. Used for Linux, macOS, and Windows apps.

According to the Microsoft .NET website, .NET is a free, cross-platform, open-source developer platform for building many different types of applications. With .NET, you can use multiple languages, editors, and libraries to build for web, mobile, desktop, games, IoT, and more. You can write .NET apps in C#, F#, or Visual Basic. C# is a simple, modern, object-oriented, and type-safe programming language. F# is a programming language that makes it easy to write succinct, robust, and performant code. Visual Basic is an approachable language with a simple syntax for building type-safe, object-oriented apps.

Whether you are working in C#, F#, or Visual Basic, your code will run natively on any compatible operating system. You can build many types of apps with .NET; some are cross-platform, and some target a specific set of operating systems and devices.

.NET provides a standard set of base class libraries and APIs that are common to all .NET applications. Each app model can also expose additional APIs that are specific to the operating systems it runs on or the capabilities it provides. For example, ASP.NET is a cross-platform web framework that provides additional APIs for building web apps that run on Linux or Windows.

.NET helps you develop high-quality applications faster. Modern language constructs such as generics, Language Integrated Query (LINQ), and asynchronous programming make developers productive. Combined with the extensive class libraries, common APIs, multi-language support, and the powerful tooling provided by the Visual Studio family, this leads Microsoft to describe .NET as the most productive platform for developers.

.NET 7, the successor to .NET 6, is the latest version of Microsoft .NET at the time of writing. It is built for modern cloud-native apps, mobile clients, edge services, and desktop technologies. With .NET MAUI, you can create mobile experiences from a single codebase without compromising native performance.

.NET apps and libraries are built from source code and project files by using the .NET CLI or an Integrated Development Environment (IDE) such as Visual Studio.

The following example is a minimal .NET app:

Project file:

<Project Sdk="Microsoft.NET.Sdk">
  <PropertyGroup>
    <OutputType>Exe</OutputType>
    <TargetFramework>net7.0</TargetFramework>
    <ImplicitUsings>enable</ImplicitUsings>
  </PropertyGroup>
</Project>

Source code:

Console.WriteLine("Welcome to .NET 7 Design Patterns, in Depth!");

The app can be built and run with the .NET CLI:

% dotnet run

It can also be built and run as two separate steps. The following example is for an app named app:

% dotnet build
% ./bin/Debug/net7.0/app

According to the Microsoft .NET website, new versions are released annually in November. .NET versions released in odd-numbered years are Long-Term Support (LTS) releases and are supported for three years. Versions released in even-numbered years are Standard-Term Support (STS) releases and are supported for 18 months. The quality level, breaking-change policies, and all other aspects of the releases are the same. The .NET team at Microsoft works collaboratively with other organizations, such as Red Hat (for Red Hat Enterprise Linux) and Samsung (for the Tizen platform), to distribute and support .NET in various ways.

Introduction to object orientation in .NET

An object in the real world is a thing. For example, John's car, Paul's mobile phone, and Sara's table are all objects in the real world. The programming world has a similar view, where an object represents something in the real world. For example, Tom's bank account in financial software represents Tom's bank account in the real world. The details of object orientation and object-oriented programming are beyond the scope of this chapter, but the following sections introduce some important object-oriented concepts.

In the C# programming language, the class or struct keyword is used to define the type of an object, which is essentially the outline and format of the object. Object orientation has a number of fundamental concepts, which are briefly discussed in the following:

Encapsulation: Bundles an object's data together with the methods that operate on it. By using encapsulation, we control access to the data and methods and determine how the internal state of an object can be changed.

public class DemoEncap
{
    private int studentAge;

    // The field can be accessed only through the following property,
    // so the field is encapsulated and access to it is controlled.
    public int Age
    {
        get => studentAge;
        // The setter enforces a rule that direct field access could bypass.
        set => studentAge = value >= 0
            ? value
            : throw new System.ArgumentOutOfRangeException(nameof(value));
    }
}

Composition: Describes what an object is made of. For example, a car consists of four wheels.

Aggregation: Describes other objects that can be associated with an object without being part of it. For example, a human is not part of a car, but a human can sit inside the car and drive it.
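To contrast composition and aggregation in code, here is a minimal sketch with hypothetical Wheel, Driver, and Car classes. The car creates and owns its wheels (composition). A driver is created elsewhere and is only associated with the car (aggregation):

```csharp
using System.Collections.Generic;

public class Wheel { }

public class Driver
{
    public string Name { get; }
    public Driver(string name) => Name = name;
}

public class Car
{
    // Composition: the car creates its wheels and owns them;
    // they have no meaningful life outside this Car.
    private readonly List<Wheel> _wheels = new List<Wheel>
    {
        new Wheel(), new Wheel(), new Wheel(), new Wheel()
    };

    // Aggregation: the driver exists independently of the car.
    public Driver CurrentDriver { get; private set; }

    public int WheelCount => _wheels.Count;

    public void SitIn(Driver driver) => CurrentDriver = driver;

    // The Driver object still exists after leaving the car.
    public void GetOut() => CurrentDriver = null;
}
```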

Inheritance: Inheritance lets you reuse existing code by defining a child class based on a parent class. All accessible methods and members of the parent class are then available to the child class, and the child class can extend the capabilities of the parent class. When using inheritance, two types of casting can occur:

Implicit casting: Storing a child class object in a parent class variable.

Explicit casting: The destination type must be stated explicitly. This kind of cast can throw an exception at run time, so it is better to check whether the cast is possible by using the is keyword before casting (alternatively, the as keyword returns null instead of throwing).
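The two kinds of casting can be sketched with a hypothetical Animal hierarchy. Upcast shows an implicit cast. Describe checks with is before an explicit cast. TryBark uses as, which yields null instead of throwing:

```csharp
public class Animal
{
    public string Name { get; }
    public Animal(string name) => Name = name;
}

public class Dog : Animal
{
    public Dog(string name) : base(name) { }
    public string Bark() => $"{Name} says woof";
}

public class Cat : Animal
{
    public Cat(string name) : base(name) { }
}

public static class CastingDemo
{
    // Implicit cast: a child object stored in a parent-type variable.
    public static Animal Upcast(Dog dog)
    {
        Animal animal = dog;
        return animal;
    }

    // Explicit cast, guarded with "is" so it cannot throw InvalidCastException.
    public static string Describe(Animal animal)
    {
        if (animal is Dog)
        {
            Dog dog = (Dog)animal;
            return dog.Bark();
        }
        return $"{animal.Name} is not a dog";
    }

    // "as" returns null when the cast is not possible.
    public static string TryBark(Animal animal) => (animal as Dog)?.Bark();
}
```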

Abstraction: With abstraction, the main idea of an object is identified and the details are ignored. Child classes then implement the details according to their own problem space. In C#, you can use the abstract keyword to define an abstract class or method, and child classes implement the introduced abstractions through inheritance. How abstract a class should be is an important decision: the more abstract the class, the more widely it can be used, but the less code it shares.

Polymorphism: Polymorphism lets a child class change the implementation it inherits from its parent class. In C#, the child class does this by redefining the method with the override keyword. For a method to be overridable in this way, the parent class must declare it as virtual. Members declared as abstract in the parent class are also implemented in the child class with the override keyword. If a method is defined in the parent class and a method with the same signature is defined in the child class without override, the parent's method is said to be hidden (method hiding). This type of inheritance is called non-polymorphic inheritance. The new keyword can be used to declare such a method. It is optional, but omitting it produces a compiler warning.

public class A
{
    public void Print() => Console.WriteLine("I am Parent");
}

public class B : A
{
    // new: hides A.Print instead of overriding it
    public new void Print() => Console.WriteLine("I am Child");
}
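The difference between override and new shows up only when a child object is used through a parent-type variable. In this sketch, Parent and Child are hypothetical names, and the methods return strings rather than printing so the result is easy to check. The overridden method follows the object's run-time type, while the hidden method follows the variable's declared type:

```csharp
public class Parent
{
    // virtual: children may replace this implementation polymorphically.
    public virtual string Greet() => "Parent.Greet";

    // Non-virtual: a child can only hide it, not override it.
    public string Print() => "I am Parent";
}

public class Child : Parent
{
    // override: chosen at run time, even through a Parent reference.
    public override string Greet() => "Child.Greet";

    // new: hides Parent.Print; the declared type of the variable decides.
    public new string Print() => "I am Child";
}
```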

When we are dealing with a large class, its implementation can be split into several parts, usually in separate files. In this case, each part is declared with the partial keyword, and the class is called a partial class. A class in C# can have different members, including the following:

Field: A field is used to store data. Fields fall into three categories:

Constant: The value of a constant field never changes, and the compiler copies the value into every place where the constant is used.

For example, consider the following code:

public class A

{

public const string SampleConst = ".NET Design Patterns";

}

public class B

{

public B()

{

string test = A.SampleConst;

}

}

After compilation, decompiling the assembly (for example, with ILSpy) shows the following equivalent code:

public class A

{

public const string SampleConst = ".NET Design Patterns";

}

public class B

{

public B()

{

string test = ".NET Design Patterns";

}

}

As you can see, the compiler copies the value of SampleConst wherever the constant is used.

Read Only: A readonly field can be assigned only in its declaration or in a constructor; its value cannot be changed after the object has been created.

Event: The value of an event field is a reference to one or more methods that should be executed when a specific event occurs.
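Read-only and event fields can be sketched together in one hypothetical Thermostat class. The limit is a readonly field set once in the constructor, and TemperatureExceeded is an event field that holds the handlers to call:

```csharp
using System;

public class Thermostat
{
    // readonly: can be assigned only here or in a constructor.
    private readonly double _maxTemperature;

    // Event field: references the methods to run when the event occurs.
    public event EventHandler<double> TemperatureExceeded;

    public Thermostat(double maxTemperature) => _maxTemperature = maxTemperature;

    public void Report(double temperature)
    {
        // _maxTemperature = 0;  // would not compile outside a constructor
        if (temperature > _maxTemperature)
            TemperatureExceeded?.Invoke(this, temperature);  // call all subscribed handlers
    }
}
```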

Method: A method contains the statements to be executed. The method defines and implements the expected behavior of the object. It has a name, input parameters, and an output type. If two methods have the same name but different input parameters, they are said to be overloaded. In addition to ordinary methods, there are several special kinds of methods:

Constructor: The constructor initializes a newly created object. When the new keyword is used in C#, memory is allocated for the object and the matching constructor is executed.
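Here is a minimal sketch of constructors and overloading, using a hypothetical Account class. The one-parameter constructor chains to the two-parameter one with this(...), and Deposit is overloaded by parameter list:

```csharp
public class Account
{
    public string Owner { get; }
    public decimal Balance { get; private set; }

    // Constructor overloads: the arguments passed to new decide which one runs.
    public Account(string owner) : this(owner, 0m) { }  // chains to the constructor below

    public Account(string owner, decimal openingBalance)
    {
        Owner = owner;
        Balance = openingBalance;
    }

    // Overloaded methods: same name, different parameter lists.
    public void Deposit(decimal amount) => Balance += amount;

    public void Deposit(decimal amount, decimal bonusPercent) =>
        Balance += amount + amount * bonusPercent / 100m;
}
```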

Finalizer: These methods, also called destructors, are rarely used in C#. A finalizer runs when the garbage collector is about to reclaim the memory of an object that is no longer in use.

class Car

{

~Car() // finalizer

{

// cleanup statements...

}

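}

The compiler translates the finalizer into an override of the Finalize method of the Object class. This override runs your cleanup code and then calls the base class's Finalize. Conceptually, the finalizer is equivalent to the following code. You cannot write this override directly in C#; the compiler rejects it and asks you to use a finalizer instead: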

protected override void Finalize()

{

try

{

// Cleanup statements...

}

finally

{

base.Finalize();

}

}

Property: The statements in this type of method are executed when data is set or read. Behind the scenes, a property's data is usually stored in a field, but this is not required: the data can be stored in an external data source or calculated at run time. Properties are commonly used to encapsulate fields.

public string FirstName { get; set; }

Indexer: The statements in this type of method are executed when data is set or read by using the [] operator:

class StringDataStore

{

private string[] strArr = new string[10]; // internal data storage

public string this[int index]

{

get => strArr[index];

set => strArr[index] = value;

}

}

Operator: The statements in this type of method are executed when operators such as + are applied to operands of the class type:

public class Box
{
    public double length, breadth, height;

    // Runs when two Box operands are combined with +
    public static Box operator +(Box b, Box c)
    {
        Box box = new Box();
        box.length = b.length + c.length;
        box.breadth = b.breadth + c.breadth;
        box.height = b.height + c.height;
        return box;
    }
}

A class can also contain nested (inner) classes:

public class A
{
    public string GetName() => $"Vahid is {new B().GetAge()} years old";

    // Nested class, visible only inside A
    private class B
    {
        public int GetAge() => 10;
    }
}

Whatever members a class has, part of encapsulation is assigning appropriate access levels to the class and its members. C# provides the following access levels:

- Public: Members with this access level are available everywhere.

- Private: Members with this access level are available only inside the class. This is the default access level for class members.

- Protected: Members with this access level are available only inside the class and in classes derived from it.

- Internal: Members with this access level are available only inside the same assembly.

- Protected internal: Members with this access level are available within the same assembly or in classes derived from this class. In other words, this access is internal OR protected.

- Private protected: Members with this access level are available within the same class or in derived classes within the same assembly. In other words, this access is internal AND protected.
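The following sketch uses hypothetical BaseType and DerivedType classes in a single assembly to show which members a derived class can see. The compiler checks access rules, so the comments state where each member is visible. The code itself only uses accesses that compile:

```csharp
public class BaseType
{
    public int PublicValue = 1;                          // everywhere
    private int _privateValue = 2;                       // only inside BaseType
    protected int ProtectedValue = 3;                    // BaseType and derived classes
    internal int InternalValue = 4;                      // anywhere in this assembly
    protected internal int ProtectedInternalValue = 5;   // this assembly OR derived classes
    private protected int PrivateProtectedValue = 6;     // derived classes in this assembly only

    public int ReadPrivate() => _privateValue;
}

public class DerivedType : BaseType
{
    public int SumVisibleFromChild()
    {
        // _privateValue is not accessible here; every other member is.
        return PublicValue + ProtectedValue + InternalValue
             + ProtectedInternalValue + PrivateProtectedValue;
    }
}
```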

In addition to access levels, C# has a number of modifiers that change the definition of a class or its members. For example, sealed prevents other classes from inheriting from a class, and on an overriding method it prevents further overriding. When a class is sealed, extension methods can be used to extend its capabilities.

When a class is declared static, it cannot be instantiated, and its members are accessed through the class name. The abstract modifier, when applied to a class, turns it into an abstract class. When it is applied to other members, such as methods, it means that no implementation is provided, and child classes are required to provide one.
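Here is a short sketch of sealed, static, and extension methods together, using a hypothetical Temperature class. Temperature cannot be inherited from, so its behavior is extended with an extension method declared in a static class:

```csharp
// sealed: no class can inherit from Temperature.
public sealed class Temperature
{
    public double Celsius { get; }
    public Temperature(double celsius) => Celsius = celsius;
}

// public class HotTemperature : Temperature { }  // would not compile: Temperature is sealed

// static class: cannot be instantiated; its members are used through the class name.
// Extension methods must be declared in a non-nested, non-generic static class.
public static class TemperatureExtensions
{
    // "this Temperature" makes the method callable as t.ToFahrenheit().
    public static double ToFahrenheit(this Temperature t) => t.Celsius * 9 / 5 + 32;
}
```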

Along with classes in C#, there are interfaces that are very similar to abstract classes. All members of interfaces are abstract. Among the similarities between the abstract class and interface, it can be mentioned that neither can be sampled. Along with all the similarities, they also have differences, including the following:

除了 C# 中的类外,还有一些与抽象类非常相似的接口。接口的所有成员都是抽象的。在抽象类和接口之间的相似之处中,可以提到两者都不能采样。除了所有相似之处外,它们也有不同之处,包括:

- Interfaces can only inherit from other interfaces, while abstract classes can inherit from another class and implement multiple interfaces.

接口只能继承自接口,而抽象类可以继承自其他类并实现多个接口。

- Abstract classes can include instance constructors and destructors (finalizers), while interfaces cannot.

抽象类可以包含实例构造函数和析构函数(终结器),但接口不能。

Since C# 8, interfaces can also provide default implementations for methods, much like abstract classes. This feature requires runtime support (.NET Core 3.0 or later).

从 C# 版本 8 开始,接口可以具有方法的默认实现,就像抽象类一样。

using System;

public interface IPlayable
{
    void Play();
    void Pause();

    // default implementation 默认实现
    void Stop()
    {
        Console.WriteLine("Default implementation of Stop.");
    }
}

In fact, interfaces act as contracts between parts of a system. When a class implements an interface, it guarantees that it provides a specific set of capabilities, and other code can depend on that contract rather than on the concrete class. Interfaces and abstract classes are used very widely in design patterns.

事实上,接口是系统各部分之间的契约。当类实现接口时,它保证提供一组功能,其他代码可以依赖这个契约而不是具体的类。接口和抽象类在设计模式中得到了非常广泛的应用。
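The sketch below combines the points above: an interface with a default method, an interface inheriting from another interface, and an abstract class with a constructor that implements an interface. IShape, INamedShape, ShapeBase, and Square are hypothetical names; default interface methods assume C# 8 or later on .NET Core 3.0 or later.

```csharp
// Hypothetical types for illustration.
public interface IShape
{
    double Area();

    // Default implementation: implementers may provide their own, but need not.
    string Describe() => $"Shape with area {Area()}";
}

// An interface can inherit only from other interfaces.
public interface INamedShape : IShape
{
    string Name { get; }
}

// An abstract class can have a constructor and can implement interfaces.
public abstract class ShapeBase : INamedShape
{
    protected ShapeBase(string name) => Name = name;

    public string Name { get; }

    // Abstract member that also satisfies IShape.Area.
    public abstract double Area();
}

public class Square : ShapeBase
{
    private readonly double _side;

    public Square(double side) : base("square") => _side = side;

    public override double Area() => _side * _side;
}
```

One detail worth noting: a default interface method is not inherited by the class as a class member, so `Describe()` is reachable through a variable of type IShape (for example `IShape s = new Square(3); s.Describe();`), while calling it on a variable of type Square would not compile.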

Object orientation SOLID principles

面向对象 SOLID 原则

C# is an object-oriented language that provides good support for object-oriented features such as interfaces, inheritance, and polymorphism. However, the fact that C# provides these features does not guarantee that every piece of code written in it follows object-oriented principles or has acceptable quality. In practice, reaching an appropriate and correct object-oriented design in a large system is challenging and requires careful scrutiny and precision.

C# 编程语言是一种面向对象的语言,它为使用接口、继承、多态性等面向对象功能提供了良好的支持。但 C# 提供此类功能这一事实并不能保证编写的每段代码都遵循面向对象原则并具有可接受的质量。实际上,在大型系统中实现适当和正确的面向对象设计具有挑战性,并且需要大量的审查和精确性。

Various principles have been introduced to help build systems according to correct object-oriented guidelines. One of the best known is SOLID, which actually consists of five different principles:

已经引入了各种原则,以根据面向对象的正确原则和准则来生成系统。这些原则之一是 SOLID 原则。SOLID 实际上由五个不同的原则组成,它们是:

- Single Responsibility Principle (SRP)

单一责任原则 (SRP)

- Open/Closed Principle (OCP)

开/关原则 (OCP)

- Liskov Substitution Principle (LSP)

里斯科夫替代原则 (LSP)

- Interface Segregation Principle (ISP)

接口分离原则 (ISP)

- Dependency Inversion Principle (DIP)

依赖关系倒置原则 (DIP)

The name SOLID is formed from the first letters of these five principles. Together, they help keep code quality high and keep code maintainable. Each principle is explained below:

标题 SOLID 也由上述五个原则中每个原则的首字母组成。这些原则有助于编写的代码具有良好的质量,并将代码保持在可接受的水平。下面将解释这些原则中的每一个:

Single Responsibility Principle

单一责任原则

This principle states that each class should have only one responsibility, and therefore only one reason to change. When this principle is not followed, a single class accumulates a large amount of code for unrelated tasks, so many different change requests in the system end up modifying it, and every such change requires its tests to be run again. By following this principle, a big problem is divided into several smaller problems, each implemented as its own class. A change in the system then affects only one of these small classes, and only the tests related to that class need to be run again. SRP is closely related to another object-oriented design principle called Separation of Concerns (SoC).

该原则指出,每个类应该只有一个职责,因此只有一个更改该类的理由。如果不遵循此原则,一个类会包含大量不相关的代码,系统中的许多需求变化都需要修改它。对此类进行更改将导致重新执行测试。另一方面,通过遵守这个原则,一个大问题被分成几个小问题,每个问题都以类的形式实现。因此,在系统中进行更改将导致对其中一个小类进行更改,并且只需要再次运行与该小类相关的测试即可。SRP 与面向对象中称为关注点分离 (SoC) 的原则非常相似。

For example, consider the following code:

例如,请考虑以下代码:

using System.Collections.Generic;

public class WrongSRP
{
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public string Email { get; set; }
    public static List<WrongSRP> Users { get; set; } = new List<WrongSRP>();

    public void NewUser(WrongSRP user)
    {
        Users.Add(user);
        SendEmail(user.Email, "Account Created", "Your new account created");
    }

    public void SendEmail(string email, string subject, string body)
    {
        // Send email
    }
}

Suppose you are asked to design and implement a mechanism for creating new users, and an email must be sent after each user account is created. The preceding code has two methods: NewUser, which creates a new user, and SendEmail, which sends an email. These are two different behaviors in the same class that are not directly related to each other. Sending an email is not a concern of the user entity, so having this method in this class violates SRP: apart from managing user-related requests, the class is also responsible for sending emails. With this design, the class must change whenever the email-sending process changes, for example, when the email service provider changes. To fix this, the preceding code can be rewritten as follows:

假设请求设计和实现一种机制来创建新用户。创建用户帐户后,需要发送电子邮件。上述代码有两个方法,分别称为 NewUser 来创建新用户,另一个方法称为 SendEmail 来发送电子邮件。同一类中有两种不同的行为,它们彼此之间没有直接关系。换句话说,发送电子邮件与用户实体没有直接关系,并且此类中存在此方法违反了 SRP 原则。因为这个类不再只负责一个任务,除了管理与用户相关的请求外,它还负责发送电子邮件。如果电子邮件发送过程发生变化,上述设计将导致代码发生变化。例如,电子邮件服务提供商会发生变化。为了修改此代码,可以按如下方式重写上述代码:

using System.Collections.Generic;

public class SRP
{
    public string FirstName { get; set; }
    public string LastName { get; set; }
    public string Email { get; set; }
    public static List<SRP> Users { get; set; } = new List<SRP>();

    public void NewUser(SRP user)
    {
        Users.Add(user);
        // Sending the email is delegated to a dedicated class
        new EmailService()
            .SendEmail(user.Email, "Account Created", "Your new account created");
    }
}

public class EmailService
{
    public void SendEmail(string email, string subject, string body)
    {
        // Send email
    }
}

As the preceding code shows, the task of sending emails has been moved to the EmailService class. With this rewrite, SRP is respected, and the code no longer has the problems of the previous version.

从前面的代码中可以看出,发送邮件的任务已经转移到了 EmailService 类,通过这次重写,尊重了 SRP 原则,不会有之前代码的问题。
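The rewrite above still creates EmailService directly inside NewUser. One possible further step, sketched here under assumptions, is to pass the email sender in through the constructor so the user logic can be tested without sending real emails; this also anticipates the Dependency Inversion Principle listed earlier. IEmailSender, User, UserService, and FakeEmailSender are hypothetical names, not part of the original example.

```csharp
using System.Collections.Generic;

// Hypothetical abstraction over email sending.
public interface IEmailSender
{
    void Send(string to, string subject, string body);
}

public class User
{
    public string FirstName { get; set; } = "";
    public string LastName { get; set; } = "";
    public string Email { get; set; } = "";
}

// Responsible only for registering users; it does not know how emails are sent.
public class UserService
{
    private readonly List<User> _users = new List<User>();
    private readonly IEmailSender _emailSender;

    public UserService(IEmailSender emailSender) => _emailSender = emailSender;

    public IReadOnlyList<User> Users => _users;

    public void NewUser(User user)
    {
        _users.Add(user);
        _emailSender.Send(user.Email, "Account Created", "Your new account created");
    }
}

// Hypothetical test double: records recipients instead of sending anything.
public class FakeEmailSender : IEmailSender
{
    public List<string> SentTo { get; } = new List<string>();

    public void Send(string to, string subject, string body) => SentTo.Add(to);
}
```

Swapping the email provider then means writing another IEmailSender implementation, with no change to UserService.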

Open/Closed Principle

开/关原则 (OCP)

This principle states that a class should be open for extension but closed for modification. In other words, once a class has been implemented and other parts of the system have started using it, it should not be changed, because changes to it can break the parts of the system that depend on it. If new capabilities are needed, they should be added by extending the class rather than editing it. That way, the parts of the system that use the class are not affected by the change, and only the new code needs to be tested.

此原则指出,类应对扩展开放 (open for extension),对修改关闭 (closed for modification)。换句话说,当实现一个类,并且系统的其他部分开始使用这个类时,它不应该被改变。很明显,在此类中进行更改可能会导致系统中依赖它的部分出现问题。如果需要向类添加新功能,则应通过扩展类来添加这些功能。在这种情况下,使用此类的系统部分将不会受到应用的更改的影响,并且为了测试新代码,只需要测试新部分。

For example, suppose you are asked to write a class to calculate employee salaries. The initial version of the requirement states that each employee's salary is calculated by multiplying their working hours by 1000. Based on this, the following code is written:

例如,假设您被要求编写一个类来计算员工工资。在此要求的初始计划中,规定所有员工的工作时间必须乘以 1000,这样就可以计算出工资。通过此说明,编写了以下代码:

public class WrongOCP
{
    public string Name { get; set; }

    public decimal CalculateSalary(decimal hours) => hours * 1000;
}

The preceding code has a method called CalculateSalary, which calculates each person's salary from their working hours. After this code has been in use for some time, a new requirement arrives: a new type of employee, the manager, is defined in the system. For managers, the working hours should be multiplied by 1500, and for everyone else by 1000. To meet this need, we change the preceding code as follows:

前面的代码有一个名为 CalculateSalary 的方法,它通过接收工作时间来计算每个人的工资。此代码使用一段时间后,据说系统中定义了一种称为经理的新型员工。对他们来说,工作时间应该乘以 1500,对其他人来说,应该乘以 1000。因此,为了满足这一需求,我们按如下方式更改了前面的代码:

public class WrongOCP
{
    public string Name { get; set; }
    public string UserType { get; set; }

    public decimal CalculateSalary(decimal hours)
    {
        if (UserType == "Manager")
            return hours * 1500;
        return hours * 1000;
    }
}

To add this new feature, we changed the existing code, which violates OCP. Because the class itself was modified, every part of the system that uses it is affected. To meet the same requirement while following OCP, the preceding code can be rewritten as follows:

为了将这个新功能添加到类中,我们更改了现有代码,这违反了 OCP 原则。通过在类中进行这些更改,使用此类的系统的所有部分都将受到影响。为了涵盖以原始 OCP 形式提出的要求,可以按如下方式重写前面的代码:

public abstract class OCP
{
    protected OCP(string name) => Name = name;

    public string Name { get; set; }

    public abstract decimal CalculateSalary(decimal hours);
}

public class Manager : OCP
{
    public Manager(string name) : base(name) { }

    public override decimal CalculateSalary(decimal hours) => hours * 1500;
}

public class Employee : OCP
{
    public Employee(string name) : base(name) { }

    public override decimal CalculateSalary(decimal hours) => hours * 1000;
}

In the preceding code, if we want to add a consultant role, for example, it is enough to create a new class for the consultant and define how their salary is calculated, without touching the existing code. In this way, new functionality is added without changing the current code.

在上面的代码中,例如,如果我们想添加顾问的角色,只需为顾问创建一个新类并定义计算其薪水的过程就足够了,而无需触及现有代码。通过这种方式,可以在不更改当前代码的情况下添加新功能。
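A sketch of the consultant extension described above. The OCP, Manager, and Employee classes are repeated unchanged only so the example compiles on its own. Consultant and Payroll are hypothetical additions, and the consultant rate of 1200 is an assumed value chosen for illustration.

```csharp
using System.Collections.Generic;
using System.Linq;

// --- Existing classes, unchanged ---
public abstract class OCP
{
    protected OCP(string name) => Name = name;
    public string Name { get; set; }
    public abstract decimal CalculateSalary(decimal hours);
}

public class Manager : OCP
{
    public Manager(string name) : base(name) { }
    public override decimal CalculateSalary(decimal hours) => hours * 1500;
}

public class Employee : OCP
{
    public Employee(string name) : base(name) { }
    public override decimal CalculateSalary(decimal hours) => hours * 1000;
}

// --- New behavior, added only by extension ---
// Hypothetical consultant role; the rate of 1200 is an assumed value.
public class Consultant : OCP
{
    public Consultant(string name) : base(name) { }
    public override decimal CalculateSalary(decimal hours) => hours * 1200;
}

// Hypothetical caller: depends only on the OCP base type, so adding
// Consultant required no change here either.
public static class Payroll
{
    public static decimal Total(IEnumerable<OCP> staff, decimal hours) =>
        staff.Sum(p => p.CalculateSalary(hours));
}
```

For 10 hours each, a manager, an employee, and a consultant would total 15000 + 10000 + 12000 = 37000.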

Liskov Substitution Principle

里斯科夫替代原则 (LSP)

This principle states that objects of a child class should be able to replace objects of the parent class without changing the final result. To make this clear, suppose we are asked to design an infrastructure for reading the contents of various files and writing content to them. It is also required that a message be displayed to the user before reading from or writing to text files. For this purpose, the following code can be considered:

该原则指出,子类的对象应该能够替换父类,因此最终结果没有变化。为了清楚地说明这个问题,让我们假设我们被要求设计一个基础设施,通过该基础设施,可以读取和写入各种文件的内容。还指出,在读取和写入文本文件之前,应向用户显示一条消息。为此,可以考虑以下代码:

using System;

public class FileManager
{
    public virtual void Read() => Console.WriteLine("Reading from file...");
    public virtual void Write() => Console.WriteLine("Writing to file...");
}

public class TextFileManager : FileManager
{
    public override void Read()
    {
        Console.WriteLine("Reading text file...");
        base.Read();
    }

    public override void Write()
    {
        Console.WriteLine("Writing to text file...");
        base.Write();
    }
}

Later, a new requirement states that XML files must also be handled, but writing to XML files is not allowed, so the write behavior should not be offered to the user for XML files. Under these conditions, the preceding code changes as follows:

一段时间后,声明将消除写入 XML 文件的可能性,并且无需向用户提供 XML 文件的写入行为。在这些条件下,前面的代码将更改如下:

using System;

public class FileManager
{
    public virtual void Read() => Console.WriteLine("Reading from file...");
    public virtual void Write() => Console.WriteLine("Writing to file...");
}

public class TextFileManager : FileManager
{
    public override void Read()
    {
        Console.WriteLine("Reading from text file...");
        base.Read();
    }

    public override void Write()
    {
        Console.WriteLine("Writing to text file...");
        base.Write();
    }
}

public class XmlFileManager : FileManager
{
    // Writing to XML files is not allowed, so the call is rejected at run time
    public override void Write() => throw new NotImplementedException();
}

With the XmlFileManager class added, the following problem appears:

现在,已为 XmlFileManager 添加了前面的类,此时会出现以下问题:

FileManager fm = new XmlFileManager();
fm.Read();
fm.Write(); // Runtime error 运行时错误

In the preceding code, calling Write throws a NotImplementedException. An XmlFileManager object therefore cannot stand in for a FileManager object: substituting the child class for the parent class changes the final result. Had we worked only with the parent class (FileManager fm = new FileManager()), both calls would have completed normally. This is a violation of LSP.
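One common way to remove this violation, offered here as a sketch and not as the book's own solution, is to split reading and writing into separate interfaces so that each type promises only what it can actually do (an idea closely related to the Interface Segregation Principle). IFileReader, IFileWriter, and FileJobs are hypothetical names introduced for this illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical interfaces: a type implements IFileWriter only if it can really write.
public interface IFileReader { void Read(); }
public interface IFileWriter { void Write(); }

public class TextFileManager : IFileReader, IFileWriter
{
    public void Read() => Console.WriteLine("Reading from text file...");
    public void Write() => Console.WriteLine("Writing to text file...");
}

// XML files are read-only here, so XmlFileManager does not implement IFileWriter.
public class XmlFileManager : IFileReader
{
    public void Read() => Console.WriteLine("Reading from XML file...");
}

public static class FileJobs
{
    // Every IFileWriter passed in can be substituted safely, because only
    // types that actually support writing can appear in this list.
    public static int WriteAll(IEnumerable<IFileWriter> writers)
    {
        int count = 0;
        foreach (var w in writers)
        {
            w.Write();
            count++;
        }
        return count;
    }
}
```

With this design, putting an XmlFileManager into a `List<IFileWriter>` is a compile-time error instead of a runtime exception, so the problem surfaces before the program runs.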